- Department of Medical Sciences, Faculty of Biology, Medicine and Health, University of Manchester, Manchester, United Kingdom

- Department of High-Dimensional Neurology, University College London, London, United Kingdom

- Department of Computer Information Systems, De Anza College, Cupertino, United States

- Department of Neurosurgery, Manchester Centre for Clinical Neurosciences, Salford Royal Hospital, Manchester, United Kingdom

- Victor Horsley Department of Neurosurgery, National Hospital for Neurology and Neurosurgery, London, United Kingdom

Correspondence Address:

Sayan Biswas, Faculty of Biology, Medicine and Health, University of Manchester, Manchester, United Kingdom.

DOI:10.25259/SNI_1086_2022

Copyright: © 2023 Surgical Neurology International This is an open-access article distributed under the terms of the Creative Commons Attribution-Non Commercial-Share Alike 4.0 License, which allows others to remix, transform, and build upon the work non-commercially, as long as the author is credited and the new creations are licensed under the identical terms.

How to cite this article: Sayan Biswas1, Joshua Ian MacArthur1, Anand Pandit2, Lareyna McMenemy1, Ved Sarkar3, Helena Thompson1, Mohammad Saleem Saleemi4, Julian Chintzewen1, Zahra Rose Almansoor1, Xin Tian Chai1, Emily Hardman1, Christopher Torrie1, Maya Holt1, Thomas Hanna1, Aleksandra Sobieraj1, Ahmed Toma5, K. Joshi George4. Predicting neurosurgical referral outcomes in patients with chronic subdural hematomas using machine learning algorithms – A multi-center feasibility study. 20-Jan-2023;14:22

How to cite this URL: Sayan Biswas1, Joshua Ian MacArthur1, Anand Pandit2, Lareyna McMenemy1, Ved Sarkar3, Helena Thompson1, Mohammad Saleem Saleemi4, Julian Chintzewen1, Zahra Rose Almansoor1, Xin Tian Chai1, Emily Hardman1, Christopher Torrie1, Maya Holt1, Thomas Hanna1, Aleksandra Sobieraj1, Ahmed Toma5, K. Joshi George4. Predicting neurosurgical referral outcomes in patients with chronic subdural hematomas using machine learning algorithms – A multi-center feasibility study. 20-Jan-2023;14:22. Available from: https://surgicalneurologyint.com/?post_type=surgicalint_articles&p=12109

Abstract

Background: Chronic subdural hematoma (CSDH) incidence and referral rates to neurosurgery are increasing. Accurate and automated evidence-based referral decision-support tools that can triage referrals are required. Our objective was to explore the feasibility of machine learning (ML) algorithms in predicting the outcome of a CSDH referral made to neurosurgery and to examine their reliability on external validation.

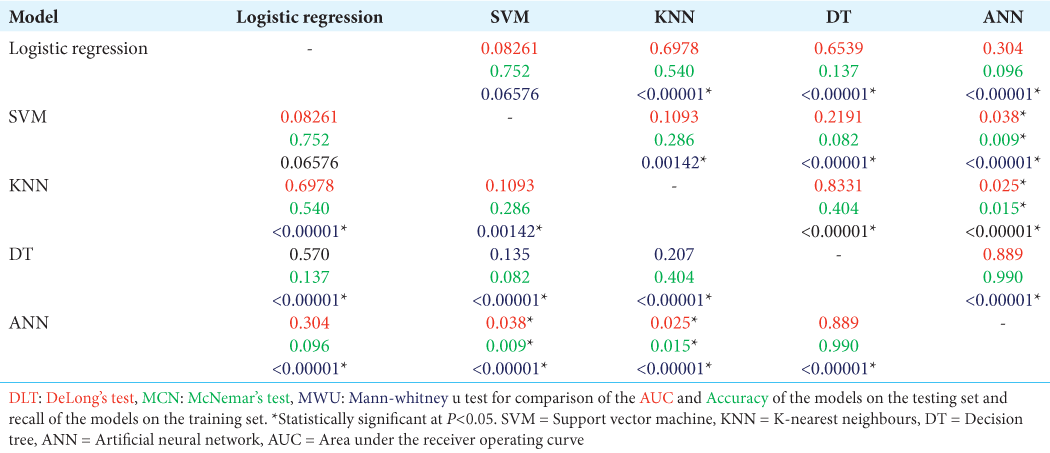

Methods: This was a multicenter retrospective case series conducted from 2015 to 2020, analyzing all CSDH patient referrals at two neurosurgical centers in the United Kingdom. Ten independent predictor variables were analyzed to predict the binary outcome of either accepting (for surgical treatment) or rejecting the CSDH referral with the aim of conservative management. Five ML algorithms were developed and externally tested to determine the most reliable model for deployment.

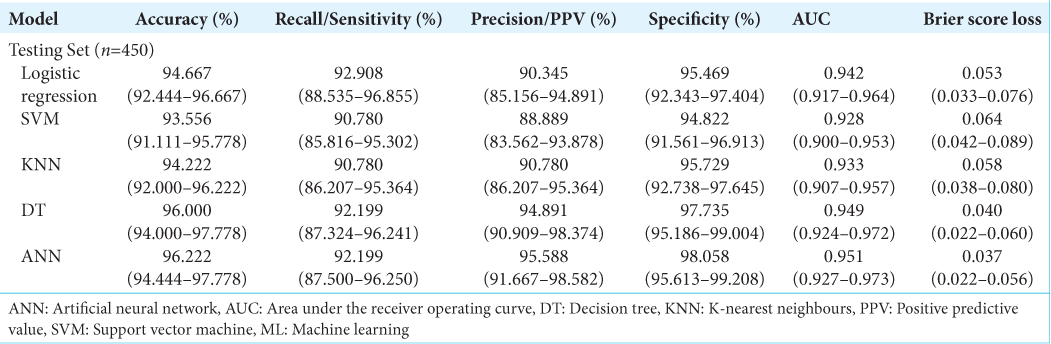

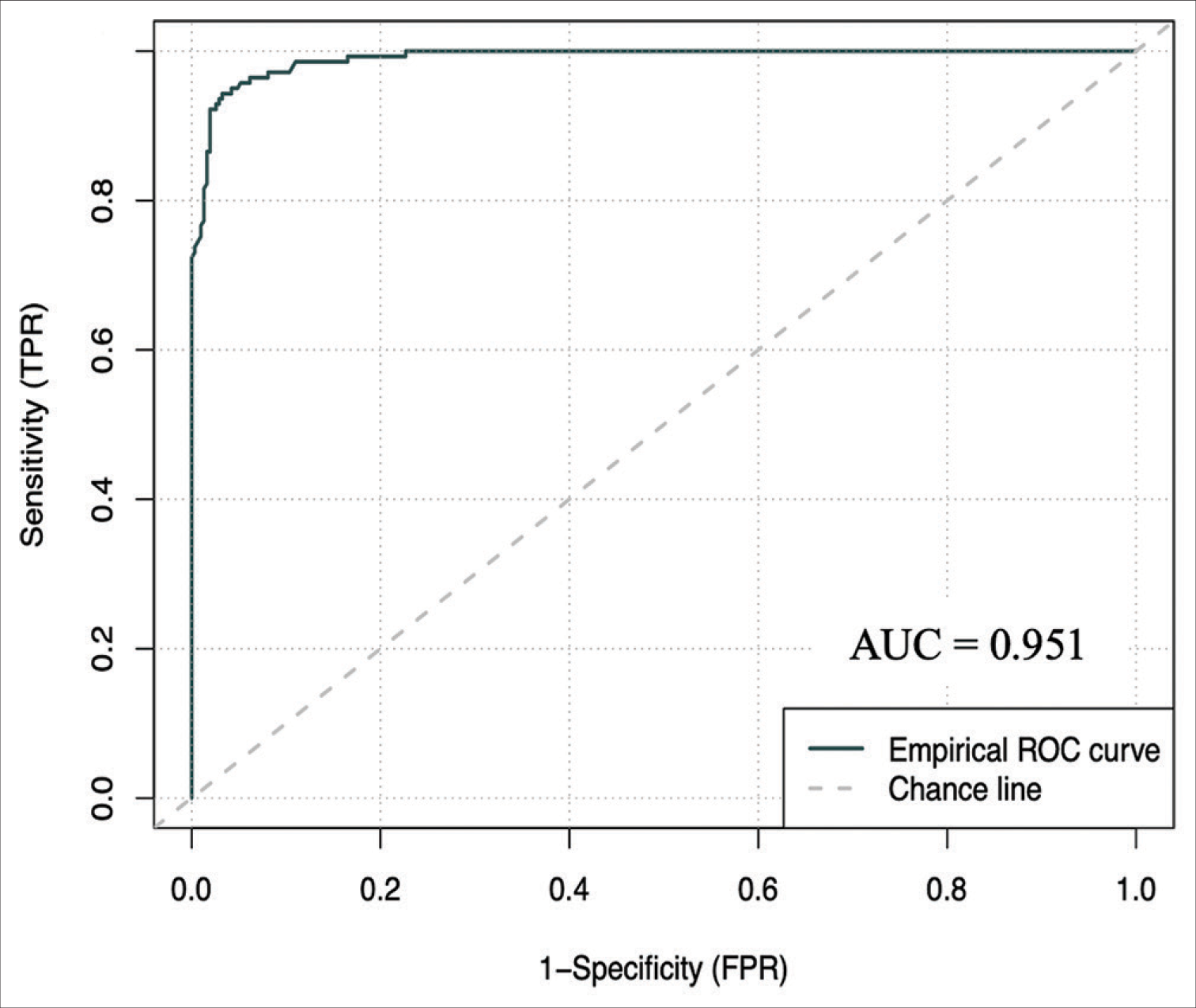

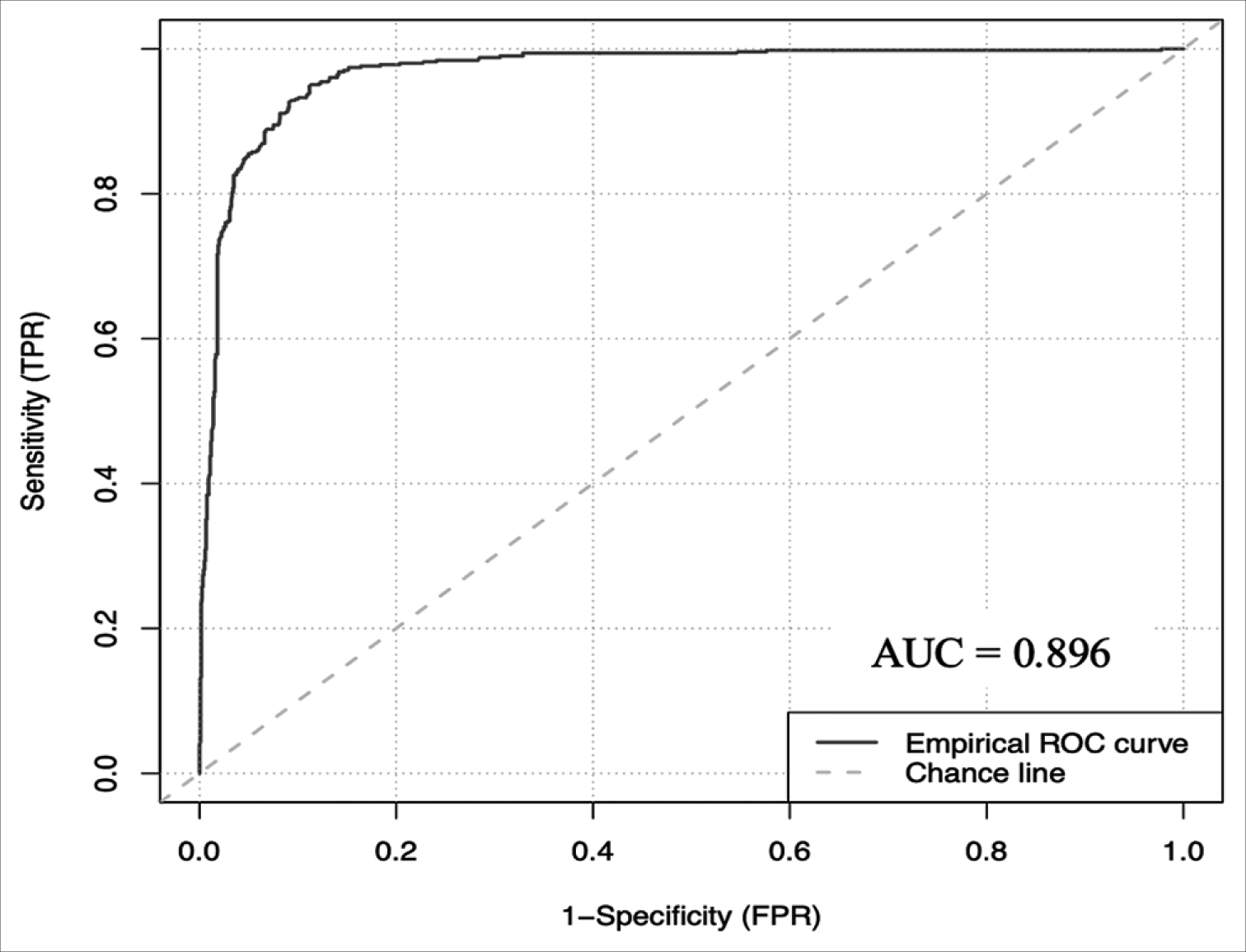

Results: A total of 1500 referrals in the internal cohort were analyzed, with 70% being rejected referrals. On a holdout set of 450 patients, the artificial neural network demonstrated an accuracy of 96.222% (94.444–97.778), an area under the receiver operating characteristic curve (AUC) of 0.951 (0.927–0.973) and a Brier score loss of 0.037 (0.022–0.056). On an external validation cohort of 1713 patients, the model demonstrated an AUC of 0.896 (0.878–0.912) and an accuracy of 92.294% (90.952–93.520). This model is publicly deployed:

Conclusion: ML models can accurately predict referral outcomes and can potentially be used in clinical practice as CSDH referral decision making support tools. The growing demand in healthcare, combined with increasing digitization of health records raises the opportunity for ML algorithms to be used for decision making in complex clinical scenarios.

Keywords: Artificial intelligence, Chronic subdural hematoma, Prediction tool, Referral decision making

INTRODUCTION

Chronic subdural hematoma (CSDH) is a common neurosurgical condition with a high prevalence in the elderly population.[

In the UK health system, patients with CSDH mostly present to the outlying district hospitals rather than the regional tertiary neurosurgery center and will be transferred across to the neurosurgery center only if there is a likelihood of needing surgical intervention. A large number of referrals are rejected for transfer as most patients are either not suitable for neurosurgery or do not need neurosurgery. These referrals, however, take up significant resources for both the referring physician as well as the neurosurgical team, who are already both overburdened with other clinical work. The development of an evidence-based decision tool for predicting CSDH referral outcomes that is easily interpretable by healthcare professionals can therefore be helpful. Such a tool would not only make a significant impact on the logistics of running an on-call neurosurgical service but also enable junior trainees on-call and emergency departments to be more confident in making the decision to refer as well as accept based on the tool.

Machine learning (ML) is a rapidly developing field that can be applied to health-related outcome prediction models. ML algorithms can identify complex non-linear relationships between a set of features and an output, generating interpretable results in real time.[

The aim of this study was to create an ML model capable of replicating neurosurgical decision making and evaluate its reliability in predicting acceptance of CSDH referrals in two separate neurosurgical centers.

MATERIALS AND METHODS

Guidelines

The Transparent Reporting of Multivariable Prediction Models for Individual Prognosis or Diagnosis checklist and the JMIR Guidelines for Developing and Reporting ML Predictive Models in Biomedical Research were followed in our analysis.[

Data source and feature selection

The initial study was a single-center retrospective analysis of all CSDH patient referrals to the local neurosurgery unit in Manchester from 2015 to 2020. The local trust’s neurosurgical referral database was examined and all CSDH referrals were exported in an anonymized manner for analysis. The exclusion criteria included any patients with missing data and those without a decision given at the time of initial referral. Sixteen neurosurgery consultants and 18 registrars were involved in the decision making of these CSDH referrals. A total of 1500 referrals were identified, of which 450 were accepted and the remaining 1050 were rejected. These 1500 patient referrals were used for the development and validation of the models. Patient consent was not required as the study was conducted in an anonymized and retrospective manner. The study was approved by the local hospital’s research and innovation board, reference number: 22HIP11.

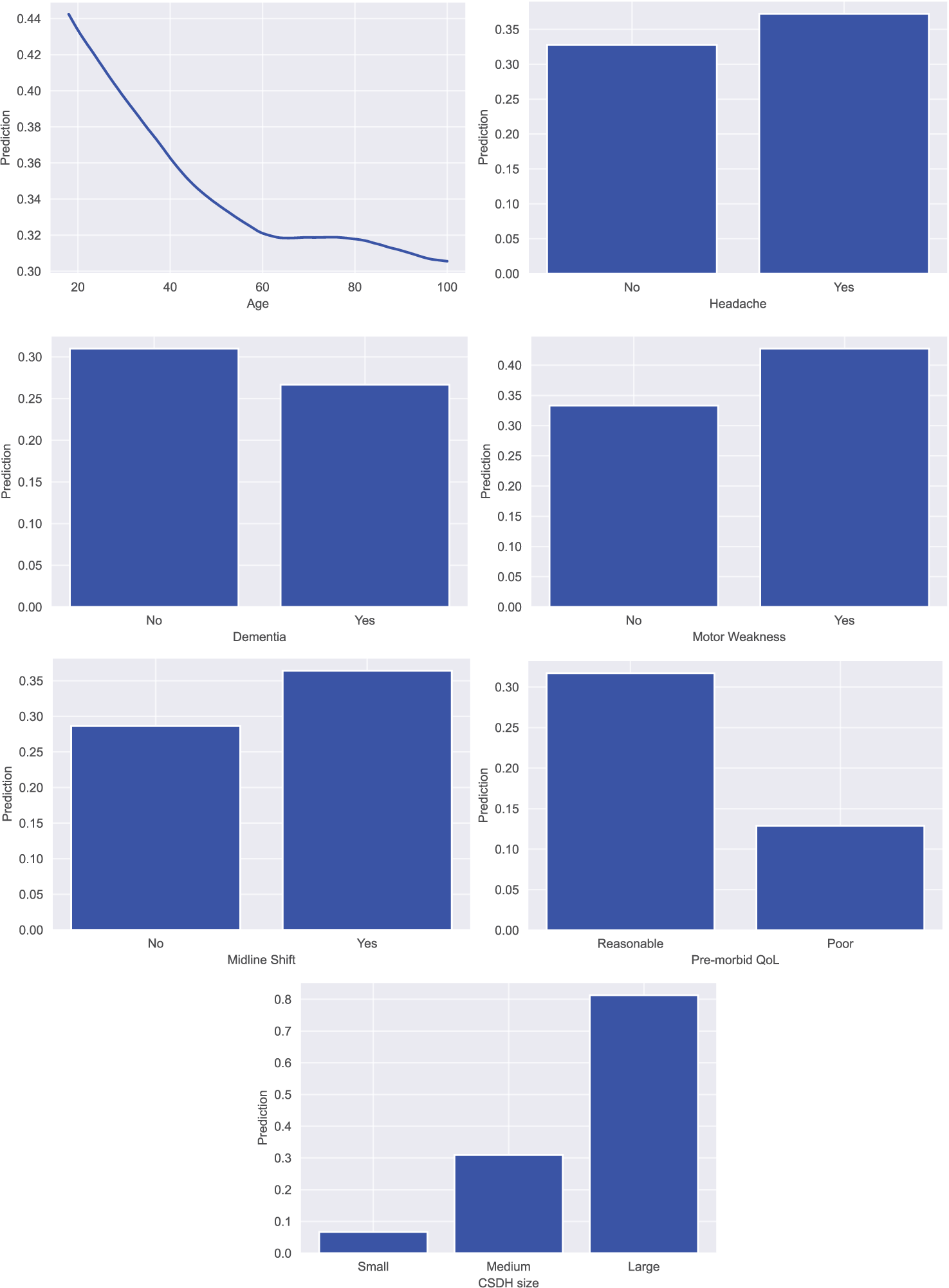

Ten predictor variables were identified on the referral database and the relevant information was extracted at the point of first referral: age (continuous), sex of the patient (male or female), GCS of the patient at referral (discrete continuous), presence of headache (yes or no), dementia (yes or no), motor weakness (yes or no), midline shift (yes or no), the size of the CSDH (small, medium or large; determined from radiological reports), the patient’s pre-morbid quality of life (QoL) (poor or reasonable), and their anti-coagulation status (yes or no). The presence of midline shift on radiological scans was based on the referring neuro-radiology report and the hematoma sizes were defined using the maximal hematoma thickness; with hematomas <1 cm being considered small, 1–2 cm as medium and >2 cm as large. Poor pre-morbid QoL was defined as patients requiring full time care or those classified as an American Society of Anesthesiologists Grade 4, with all other patients classified as having a reasonable pre-morbid QoL. The binary outcome variable of the model was the acceptance or rejection of a CSDH referral, with acceptance defined as admitting the patient to the neurosurgery center for assessment with the aim of proceeding to urgent surgical intervention. Predictor variable selection was conducted through stepwise multivariable logistic regression analysis and recursive feature elimination (RFE) using a stratified 4-fold cross validation with 4 repeats to determine the optimal number of variables employed in the ML models.
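The categorical definitions above can be illustrated with a short sketch. The function and field names are hypothetical and not taken from the study's code; only the thresholds (hematoma thickness below 1 cm, 1–2 cm, above 2 cm) and the pre-morbid QoL rule come from the text, and the handling of the exact 1 cm and 2 cm boundaries is an assumption.

```python
def hematoma_size_category(max_thickness_cm):
    """Map maximal hematoma thickness (cm) to the size category used in the text."""
    if max_thickness_cm < 1.0:
        return "small"
    if max_thickness_cm <= 2.0:  # assumption: 1 cm and 2 cm both count as medium
        return "medium"
    return "large"


def premorbid_qol(requires_full_time_care, asa_grade):
    """Poor QoL = full-time care or ASA Grade 4; everything else is reasonable."""
    if requires_full_time_care or asa_grade == 4:
        return "poor"
    return "reasonable"


# Hypothetical referral record, for illustration only
referral = {"max_thickness_cm": 1.4, "requires_full_time_care": False, "asa_grade": 2}
print(hematoma_size_category(referral["max_thickness_cm"]))
print(premorbid_qol(referral["requires_full_time_care"], referral["asa_grade"]))
```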

Model analysis

A stratified 70:30 train–test split was carried out on the total cohort of 1500 patient referrals, with 1050 data points utilized for training the models. All continuous data were centered to zero mean and scaled to unit variance, while all categorical data were dummy coded. Five ML models were created: four supervised learning algorithms (logistic regression, Support Vector Machine (SVM), K-nearest neighbors (KNN), and Decision Tree (DT)) and one deep learning multilayer perceptron artificial neural network (ANN) framework. Model hyperparameters were optimized through an iterative process that calibrated the weight estimations for each model based on their best yield for accuracy.
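A minimal sketch of this preprocessing and splitting step with scikit-learn is shown below. It is an illustration under stated assumptions, not the study's code: the data are synthetic with random labels, only two stand-in predictors are used, all hyperparameters are library defaults, and `MLPClassifier` stands in for the TensorFlow ANN.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in cohort: 1500 referrals, 450 labelled as accepted
rng = np.random.default_rng(0)
n = 1500
age = rng.normal(76, 14, n)          # continuous predictor
size = rng.integers(0, 3, n)         # categorical predictor coded 0/1/2
X = np.column_stack([age, size])
y = np.zeros(n, dtype=int)
y[:450] = 1
rng.shuffle(y)

# Stratified 70:30 split keeps the accepted/rejected ratio in both parts
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, stratify=y, random_state=0
)

# Standardize the continuous column, dummy code the categorical column
preprocess = ColumnTransformer([
    ("scale", StandardScaler(), [0]),
    ("dummy", OneHotEncoder(drop="first"), [1]),
])

models = {
    "logistic": LogisticRegression(),
    "svm": SVC(probability=True),
    "knn": KNeighborsClassifier(),
    "tree": DecisionTreeClassifier(random_state=0),
    "ann": MLPClassifier(max_iter=500, random_state=0),  # stand-in for the ANN
}

for name, model in models.items():
    pipeline = make_pipeline(preprocess, model)
    pipeline.fit(X_train, y_train)
    print(name, round(pipeline.score(X_test, y_test), 3))
```

Fitting the scaler and encoder inside the pipeline means they only see training data, which avoids leaking test-set statistics into the models.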

The models were trained on 4-fold stratified K-fold cross validation with 100 repeats on the training dataset. In stratified K-fold cross-validation, the class ratio for each fold is equivalent to the class ratio of the original dataset for each variable.[
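To make the idea of repeated stratified K-fold cross-validation concrete, here is a from-scratch sketch in plain Python. It is not the study's implementation (which presumably relied on a library routine); the round-robin fold assignment and the function names are illustrative choices, and the label counts assume a training set of 1050 referrals at the cohort's 30% acceptance rate.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k, seed):
    """Assign indices to k folds so each fold keeps roughly the overall class ratio."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class out round-robin so folds differ by at most one per class
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


def repeated_stratified_splits(labels, k=4, repeats=100, seed=0):
    """Yield (train_indices, validation_indices) for every fold of every repeat."""
    for repeat in range(repeats):
        folds = stratified_folds(labels, k, seed + repeat)
        for i in range(k):
            validation = folds[i]
            train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
            yield train, validation


# Illustrative training labels: 315 accepted and 735 rejected referrals
labels = [1] * 315 + [0] * 735
splits = list(repeated_stratified_splits(labels, k=4, repeats=100))
print(len(splits))
```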

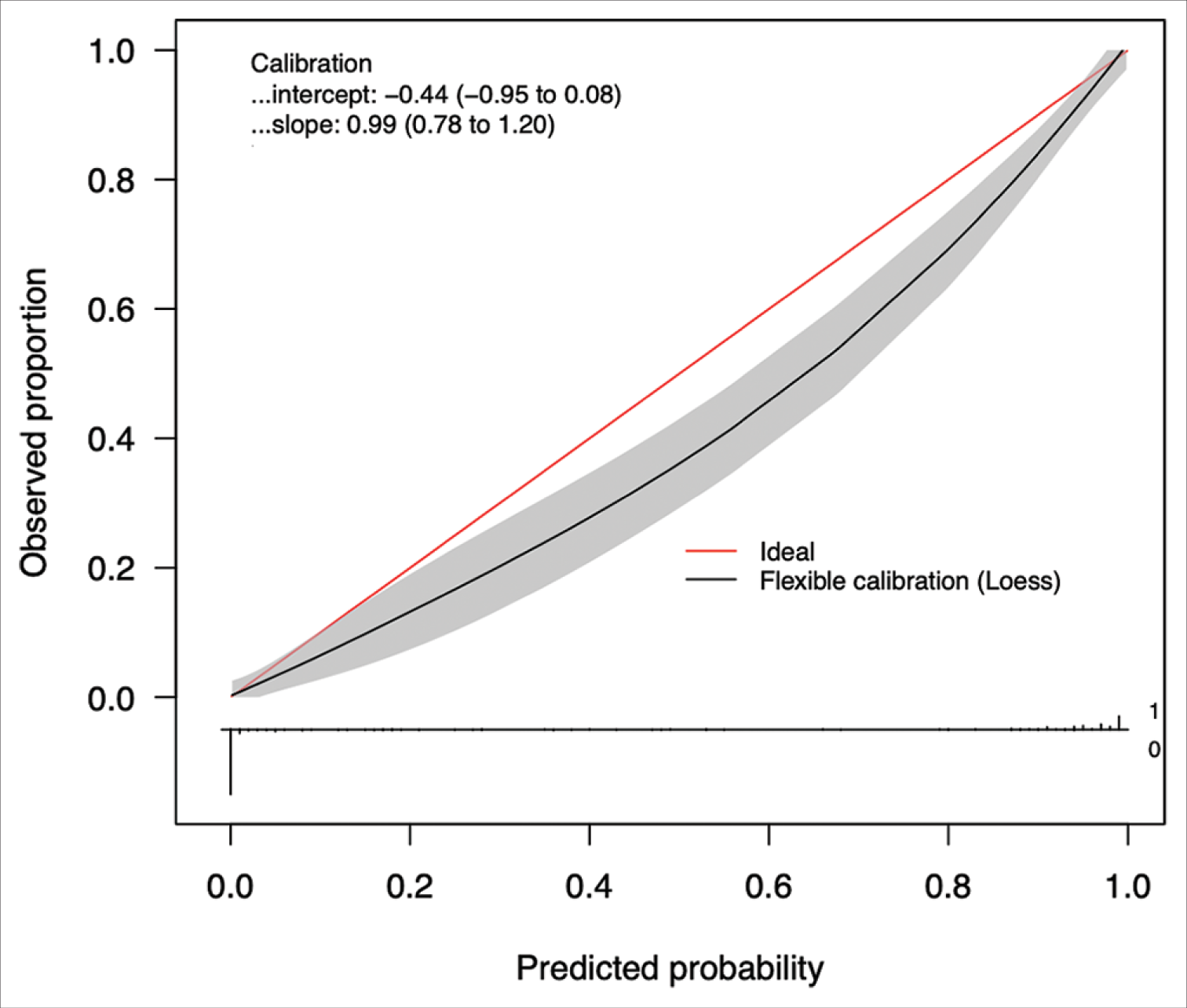

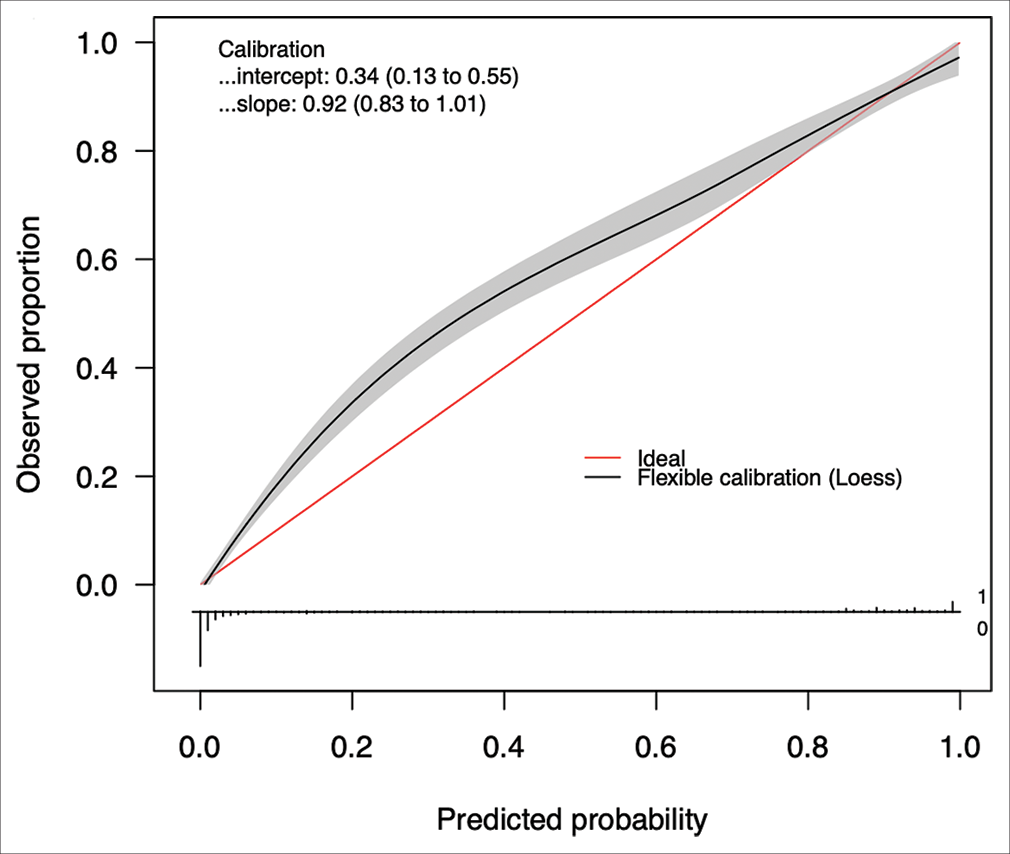

The best-performing model was then calibrated on the testing set. Calibration is a measure of the consistency between the model’s predicted probabilities and the observed probabilities in the study population. It is depicted as a calibration curve that is ideally a 45° line through the origin, with a slope of 1 (an assessment of the spread of the estimated probabilities/risk compared to the observed probabilities) and an intercept of 0 (a measure of the model’s tendency to over-estimate (<0) or under-estimate (>0) the true probability in the dataset).[
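As a rough illustration of these quantities, the sketch below estimates a calibration intercept and slope by regressing the observed outcome on the logit of the predicted probability, and computes the Brier score as the mean squared difference between predicted probability and outcome. This is an assumed, simplified approach written in plain Python: both parameters are fitted jointly by Newton's method, whereas calibration-in-the-large is often estimated separately with the slope fixed at 1. The simulated data are perfectly calibrated by construction.

```python
import math
import random


def logit(p, eps=1e-12):
    p = min(max(p, eps), 1 - eps)  # guard against log(0)
    return math.log(p / (1 - p))


def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


def calibration_intercept_slope(y_true, y_prob, iterations=25):
    """Fit logit(P(y=1)) = a + b * logit(p_hat) by Newton's method."""
    x = [logit(p) for p in y_prob]
    a, b = 0.0, 1.0  # start at perfect calibration
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y_true):
            mu = 1.0 / (1.0 + math.exp(-(a + b * xi)))
            w = mu * (1.0 - mu)
            g0 += yi - mu
            g1 += (yi - mu) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        a += (h11 * g0 - h01 * g1) / det
        b += (h00 * g1 - h01 * g0) / det
    return a, b


# Simulated, well-calibrated predictions (illustrative only)
rng = random.Random(0)
probs = [rng.uniform(0.05, 0.95) for _ in range(5000)]
outcomes = [1 if rng.random() < p else 0 for p in probs]

intercept, slope = calibration_intercept_slope(outcomes, probs)
print(round(intercept, 3), round(slope, 3), round(brier_score(outcomes, probs), 3))
```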

Model-agnostic interpretation was conducted on the trained models through partial dependence plots (PDPs) and feature importance calculations. PDPs helped visualize the impact of a predictor variable on the outcome of the model by marginalizing over the values of all the other input variables.[
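The partial dependence calculation can be sketched in a few lines: for each candidate value of the feature of interest, that value is substituted into every row of the dataset and the model's predictions are averaged. The `toy_model` below is a hypothetical stand-in for a trained classifier, and the rows are made-up values used only to show the mechanics.

```python
import math


def partial_dependence(predict, rows, feature_index, grid):
    """Average prediction over the dataset with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature_index] = value  # overwrite only the feature of interest
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


def toy_model(row):
    """Hypothetical model: probability rises with feature 0, falls with feature 1."""
    score = 1.5 * row[0] - 0.5 * row[1]
    return 1.0 / (1.0 + math.exp(-score))


rows = [[0, 1], [1, 0], [2, 1], [1, 1]]
print(partial_dependence(toy_model, rows, feature_index=0, grid=[0, 1, 2]))
```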

All ML analysis was performed using the R coding language version 3.4.3 (The R Foundation, Vienna, Austria), Python coding language (version 3.8, Python Software Foundation, Wilmington, Delaware) in an Anaconda virtual environment (Anaconda Inc., Austin, Texas), using TensorFlow 2.1 for deep learning,[

External validation

A retrospective analysis was conducted of all CSDH patients referred to a neurosurgical center in London over the 5 years from 2015 to 2020, to encompass a larger, more heterogeneous patient population. A total of 2200 patient referrals were identified, but only 1713 were selected for analysis following removal of duplicates and missing/inappropriate data. Of the 1713 referrals identified, 505 were accepted referrals and 1204 were rejected referrals. These 1713 patient referrals were used for external validation of the optimal ML model.

Application deployment

The optimal ML model was incorporated into a natively developed interactive web application that is publicly deployed and optimized for use on all major desktops, tablets, and mobile phones. This open-access clinical tool will allow primary and secondary care health-care professionals from all over the world to input values into the pre-trained model and retrieve an output in real time.

RESULTS

Baseline patient characteristics

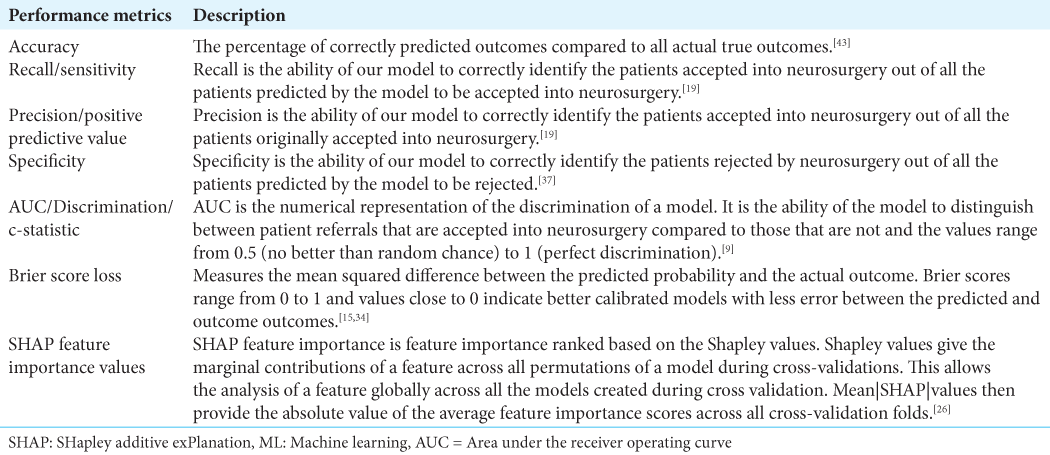

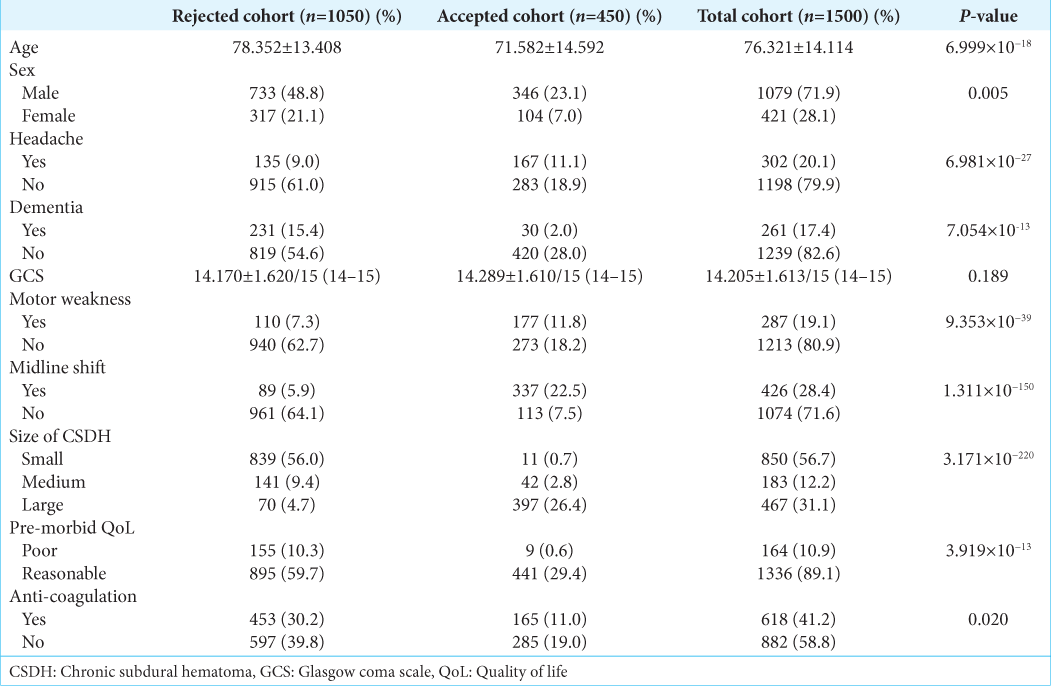

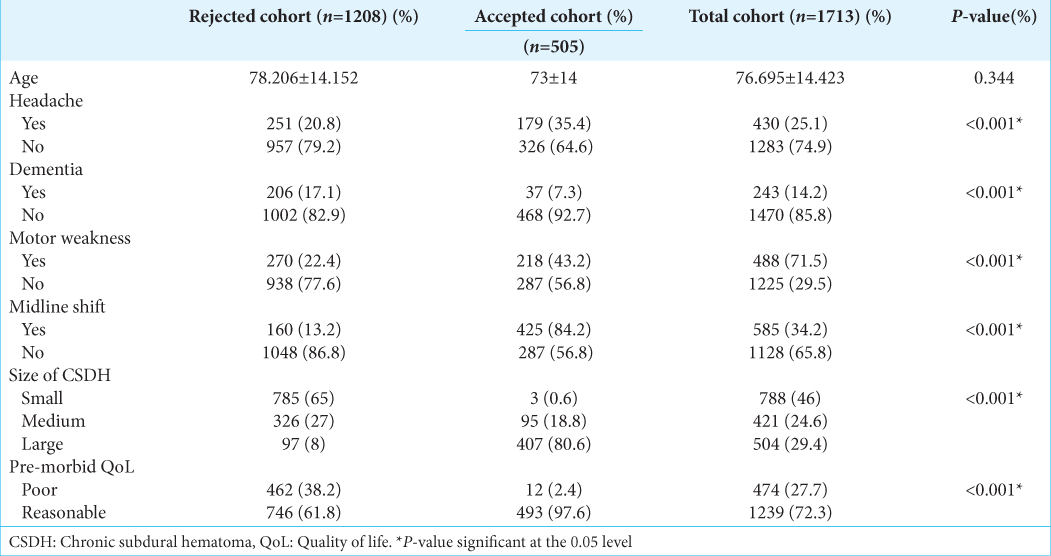

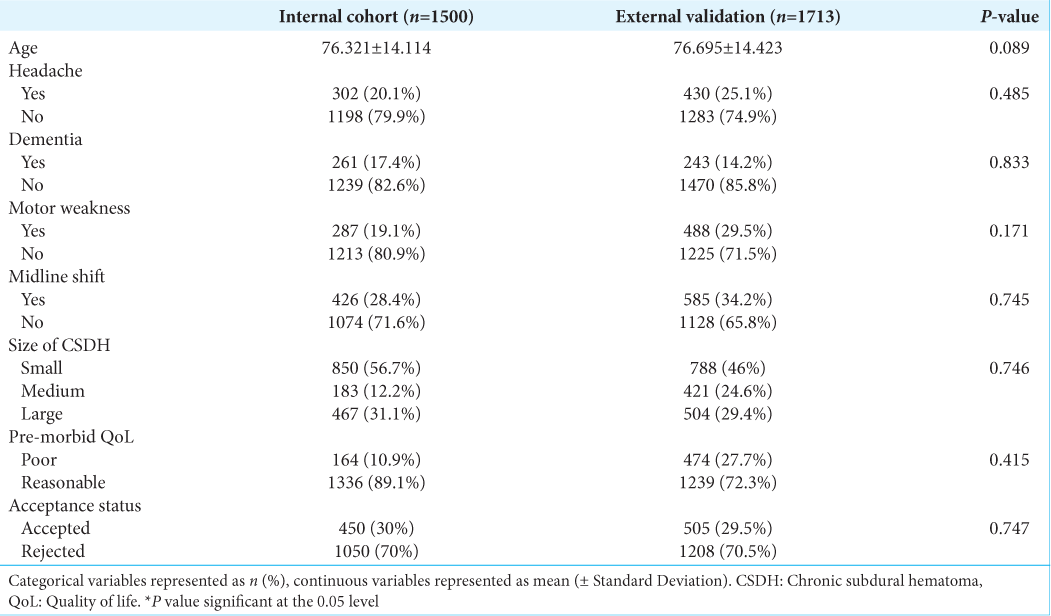

The average age of the cohort was 76.321 ± 14.114 years and 71.9% of the patients were male. Detailed cohort demographic information for this study is summarized in

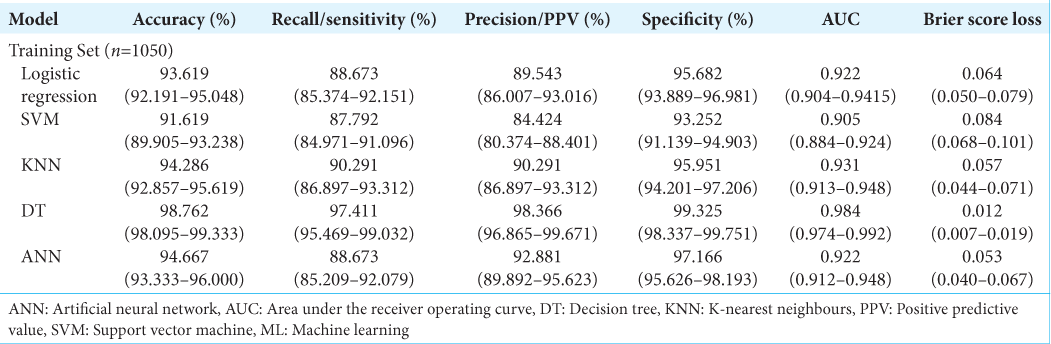

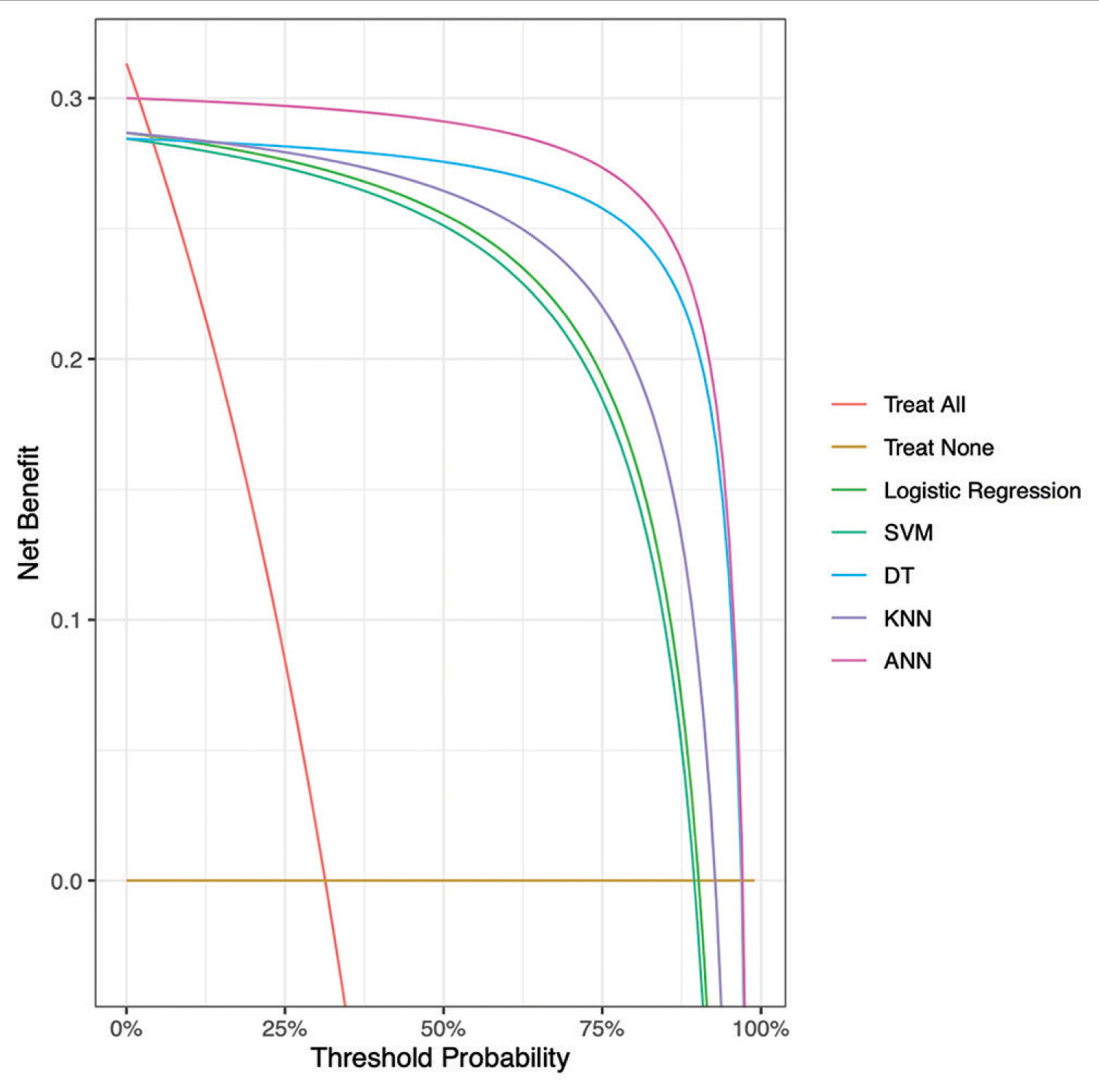

Model performance

Figure 2:

Decision curve analysis comparing expected clinical net benefit of the 5 different machine learning models on the testing set. SVM = Support Vector Machine, KNN = K-nearest neighbours, DT = Decision Tree, ANN = Artificial Neural Network, ANCHOR = Artificial Neural network for Chronic subdural HematOma Referral outcome prediction.
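For readers unfamiliar with decision curve analysis, net benefit at a threshold probability p_t is conventionally computed as TP/n − (FP/n) × p_t/(1 − p_t). The sketch below applies that standard formula to a handful of made-up predictions; it is illustrative only and does not reproduce the figure's data.

```python
def net_benefit(y_true, y_prob, threshold):
    """Net benefit of acting on predictions at or above the threshold probability."""
    n = len(y_true)
    true_pos = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    false_pos = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    weight = threshold / (1.0 - threshold)  # harm of a false positive relative to a true positive
    return true_pos / n - (false_pos / n) * weight


def net_benefit_treat_all(y_true, threshold):
    """Reference strategy: treat every case as positive."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)


# Made-up outcomes and predicted probabilities
y_true = [1, 1, 0, 0]
y_prob = [0.9, 0.4, 0.6, 0.1]
for pt in (0.3, 0.5):
    print(pt, round(net_benefit(y_true, y_prob, pt), 4),
          round(net_benefit_treat_all(y_true, pt), 4))
```

A model is useful at a given threshold when its net benefit exceeds both the treat-all strategy and the treat-none strategy, whose net benefit is zero.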

External validation of ANCHOR

The average age of the London cohort was 76.695 ± 14.423 years. Detailed baseline characteristics of the external validation patient cohort are summarized in

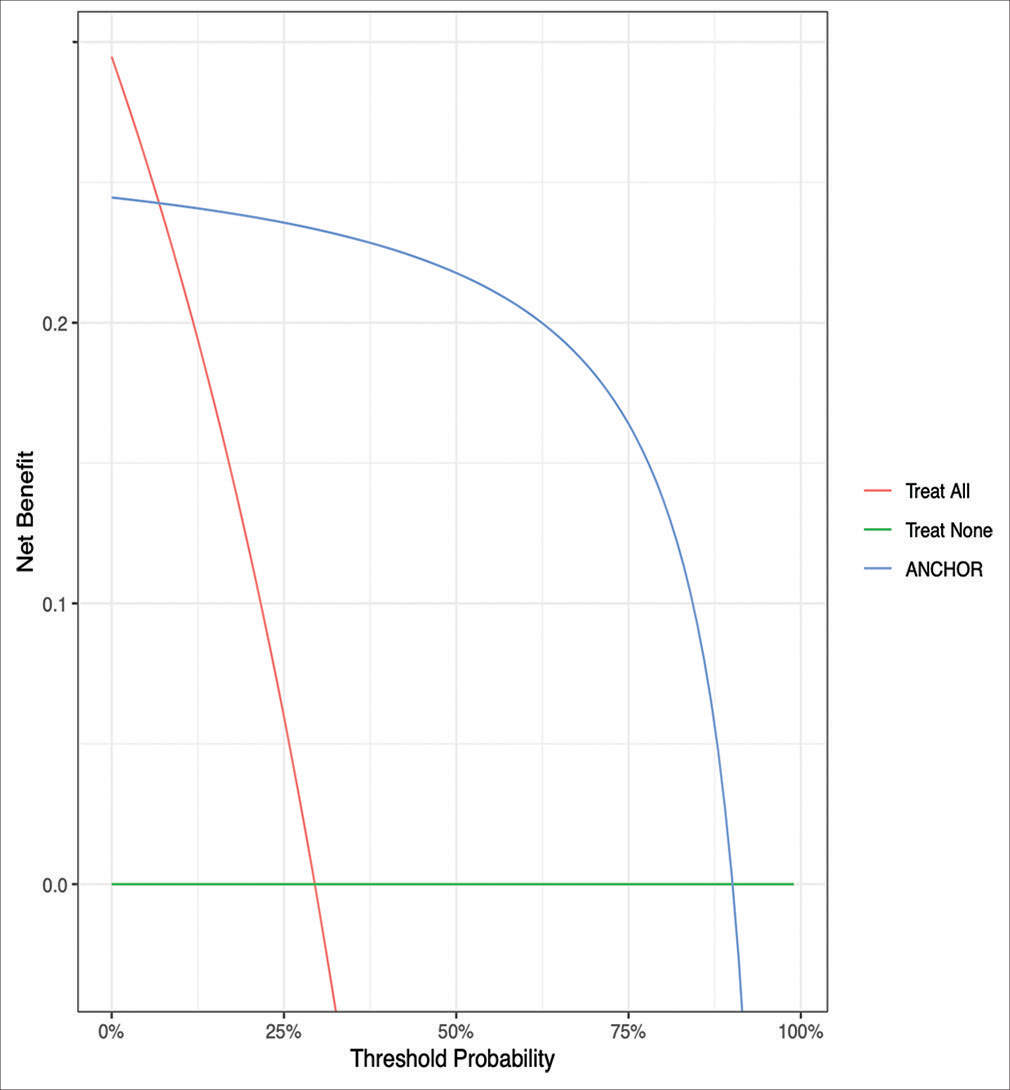

The ANCHOR ML model demonstrated good discriminative ability, calibration, overall performance, and accuracy on this external validation cohort of 1713 CSDH patient referrals from London. On external validation, the algorithm had an AUC of 0.896 (95% CI: 0.878–0.912) [
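The text does not state how the 95% confidence intervals were obtained. One common option, shown here purely as an assumption, is a percentile bootstrap: the validation set is resampled with replacement and the metric is recomputed on each resample. The AUC is computed from its pairwise definition, and the scores are synthetic.

```python
import random


def auc(y_true, scores):
    """Probability that a random positive is scored above a random negative."""
    positives = [s for y, s in zip(y_true, scores) if y == 1]
    negatives = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5  # ties count as half
    return wins / (len(positives) * len(negatives))


def bootstrap_ci(y_true, scores, metric, n_boot=500, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for a metric (assumed method)."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [y_true[i] for i in idx]
        if len(set(ys)) < 2:  # a resample needs both classes for AUC
            continue
        stats.append(metric(ys, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Synthetic scores: positives tend to score higher than negatives
rng = random.Random(1)
y_true = [1] * 40 + [0] * 80
scores = [rng.gauss(1.0, 1.0) for _ in range(40)] + [rng.gauss(0.0, 1.0) for _ in range(80)]
print(round(auc(y_true, scores), 3), bootstrap_ci(y_true, scores, auc))
```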

Figure 5:

Decision curve analysis comparing expected clinical net benefit of the 5 different machine learning models on the external validation set. SVM = Support Vector Machine, KNN = K-nearest neighbours, DT = Decision Tree, ANN = Artificial Neural Network, ANCHOR = Artificial Neural network for Chronic subdural HematOma Referral outcome prediction.

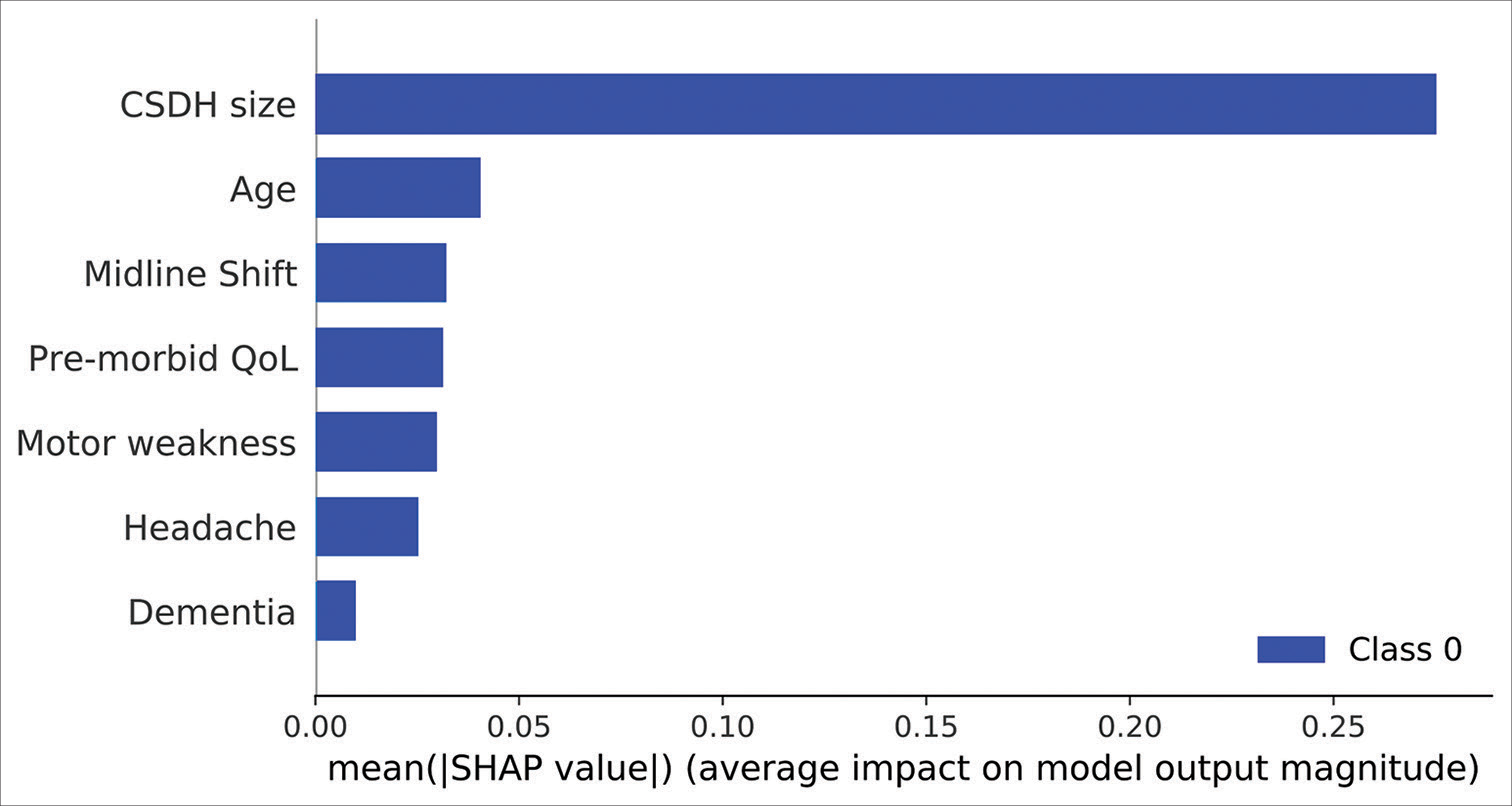

Feature analysis

RFE analysis also revealed that the 7 aforementioned predictor variables were necessary to achieve the highest average accuracy. Model agnostic SHAP feature importance calculations revealed the size of the CSDH to be the single most important predictor of referral acceptance, with a mean SHAP value of 0.30. Please refer to

Figure 7:

SHAP feature importance scores of the 7 predictor variables in the artificial neural network model. Mean |SHAP| values provide the absolute value of the average feature importance scores across all cross-validation folds. SHAP: SHapley Additive exPlanation values. CSDH: Chronic subdural hematoma, QoL: Quality of Life.

DISCUSSION

To the best of our knowledge, this is the first study that has analyzed the CSDH referral acceptance process through ML algorithms. The increasing rate of CSDH incidence and referrals, compounded by the lack of objective clinical reasoning guidelines, necessitates a more accurate and efficient referral decision-making process, established on evidence-based predictions that are reliable, consistent, and available in real time, making this process an ideal candidate for ML modeling. This study thus evaluated the utility and efficacy of multiple ML models in predicting the outcome of a CSDH patient referral. The best-performing model was validated on an external cohort, successfully demonstrating excellent accuracy in predicting CSDH referral outcome. Used as a clinical triage and decision support tool, our model could thus ensure that those most likely to need surgical intervention are assessed first.

We report that our ANN model demonstrated the best overall discriminatory ability with good calibration upon validation of unseen data points. Such conventional black box models are well suited for analyzing complex non-linear relationships between predictor and outcome variables. However, they suffer from a lack of explainability and interpretability. Abouzari et al. have previously demonstrated the superior efficacy of ANNs compared to logistic regression ML models in outcome prediction in CSDH patients; however, their study suffered from poor explainability and had a low sample size for training and validating the model.[

CSDH referral decision making process

We observed that the single most important predictive factor of acceptance was the size of the hematoma. Multiple studies have previously demonstrated the negative prognostic impact of subdural hematoma size on the recurrence and post-operative outcomes of patients.[

The findings of this study also reinforce previously demonstrated results regarding the non-significant predictors of referral acceptance. Brennan et al. have shown no significant differences in the GCS scores between transferred and non-transferred patients, with the majority of patients having a GCS score of 13–15.[

Goals of ANCHOR

Across the UK’s two largest tertiary neurosurgical centers that provide care to a large and diverse population of patients, we have demonstrated the capability of ANCHOR as a decision making adjunct within the health-care framework of the UK.

Focus was placed on the transparency, interpretability and explainability of the model for both patients and physicians. Such methods allow patients to understand what is happening and why the decision to accept or reject their referral is being made. In addition, these features combined with the real-time output of referral outcome allow for faster and safer transmission of information across all involved medical professionals. This tool can also be useful to junior physicians in training, who can check whether their initial management plan is sound. At present, acceptance of CSDH referrals to neurosurgery is a decision influenced by an individual clinician’s experience and is based on the impact of patient variables such as age, size of the subdural hematoma, and neurological deficit.[

Finally, the UK NHS is currently undergoing a digital health-care revolution, with a focus on the creation, distribution and enhancement of reliable health-care systems and research pipelines using information technology and AI.[

Limitations

Despite these results, our study has a few limitations. First, the decision of the consultant neurosurgeon was assumed to be correct and no follow-up data were collected on the outcomes of these decisions. Second, the predictor variables included in this analysis are not exhaustive. Factors such as race, ethnicity, co-morbidities (diabetes, cardiovascular disease, etc.), the presence of previous hematomas, volumetric radiological hematoma appearance, and other neurological symptoms such as ataxia and hemisensory loss were not assessed. These variables can possibly influence the outcomes of the referrals. Third, while our model demonstrated excellent accuracy across our internal and external cohorts, the results cannot be generalized to the CSDH patient population worldwide. Countries with a different funding model of healthcare and less centralization of neurosurgical services may not find this tool helpful. External validation in multiple international tertiary centers in differing healthcare settings is therefore necessary to safely employ the model in clinical practice. Despite the differences in neurosurgical practices worldwide, such a publicly available ML model still has global applicability, as it can be used by non-specialist clinicians who encounter a CSDH and are debating whether they need to refer the patient on to neurosurgery. In addition, our work shows that if good data are already available on the referrals, an ML algorithm can be built specifically for that country or region to aid the management of this condition. Fourth, the small incongruities in our model’s calibration on the internal and external datasets can be explained by the cumulative yet non-significant differences in the distribution of patient variables. These changes may represent a “gray zone” of variable clinical presentations that require more in-depth individualistic evaluation and thus may not be well evaluated by our ML model. This suggests that further prospective training and enhancement are required before this tool is used in routine clinical practice.

CONCLUSION

This is the first study to evaluate the use of ML algorithms in deciding the outcome of a referral for CSDH. It demonstrates that implementation of accurate and reliable ML algorithms as decision support tools is feasible and that such tools can potentially be used in conjunction with current clinical practice. In addition, the study highlights which variables influence clinical decision making the most. These findings can help facilitate the CSDH clinical referral decision making process and have the potential to significantly enhance patient care and physician education.

Declaration of patient consent

Patients’ consent was not required as patients’ identities were not disclosed or compromised.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

Disclaimer

The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of the Journal or its management. The information contained in this article should not be considered to be medical advice; patients should consult their own physicians for advice as to their specific medical needs.

Acknowledgments

The authors wish to thank: (1) Callum Tetlow, Data Scientist, at Northern Care Alliance, for statistical and computational input and analysis and (2) the department of neurosurgery at Salford Royal Hospital for providing the data.

References

1. Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. arXiv. 2016. 2: 1-19

2. Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, editors. TensorFlow: A System for Large-scale Machine Learning. Open Access to the Proceedings of the USENIX Symposium on Operating. Systems Design and Implementation. Berkeley: USENIX Association; 2016. p. 265-83

3. Abouzari M, Rashidi A, Zandi-Toghani M, Behzadi M, Asadollahi M. Chronic subdural hematoma outcome prediction using logistic regression and an artificial neural network. Neurosurg Rev. 2009. 32: 479-84

4. Adhiyaman V, Chattopadhyay I, Irshad F, Curran D, Abraham S. Increasing incidence of chronic subdural haematoma in the elderly. QJM. 2017. 110: 375-8

5. Asghar M, Adhiyaman V, Greenway MW, Bhowmick BK, Bates A. Chronic subdural haematoma in the elderly--a North Wales experience. J R Soc Med. 2002. 95: 290-2

6. Aspegren OP, Åstrand R, Lundgren MI, Romner B. Anticoagulation therapy a risk factor for the development of chronic subdural hematoma. Clin Neurol Neurosurg. 2013. 115: 981-4

7. Berrar D, editors. Cross-validation. Encyclopedia of Bioinformatics and Computational Biology. Netherlands: Elsevier; 2019. p. 542-5

8. Biswas S, MacArthur J, Sarkar V, Thompson H, Saleemi M, George KJ, editors. Development and validation of the chronic subdural hematoma referral outcome prediction using statistics (CHORUS) score: A retrospective study at a national tertiary centre. World Neurosurg. 2022. p. In Press:S1878875022016497

9. Bradley AP. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997. 30: 1145-59

10. Brennan PM, Kolias AG, Joannides AJ, Shapey J, Marcus HJ, Gregson BA. The management and outcome for patients with chronic subdural hematoma: A prospective, multicenter, observational cohort study in the United Kingdom. J Neurosurg. 2017. 127: 732-9

11. Buitinck L, Louppe G, Blondel M, Pedregosa F, Mueller A, Grisel O, editors. API Design for Machine Learning Software: Experiences from the Scikit-learn Project. European Conference on Machine Learning and Principles and Practices of Knowledge Discovery in Databases. 2013. p.

12. Collins GS, Reitsma JB, Altman DG, Moons K. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD statement. BMC Med. 2015. 13: 1

13. Cresswell K, Williams R, Sheikh A. Bridging the growing digital divide between NHS England’s hospitals. J R Soc Med. 2021. 114: 111-2

14. Foelholm R, Waltimo O. Epidemiology of chronic subdural haematoma. Acta Neurochir (Wien). 1975. 32: 247-50

15. Gneiting T, Raftery AE. Strictly proper scoring rules, prediction, and estimation. J Am Stat Assoc. 2007. 102: 359-78

16. Greenwell BM, Boehmke BC, McCarthy AJ. A Simple and Effective Model-Based Variable Importance Measure. ArXiv Stat ML. 2018. 1: 1-27

17. de Hond AA, Leeuwenberg AM, Hooft L, Kant IM, Nijman SW, van Os HJ. Guidelines and quality criteria for artificial intelligence-based prediction models in healthcare: A scoping review. NPJ Digit Med. 2022. 5: 2

18. Joshi I, Morley J, editors. Artificial Intelligence: How to get it Right. Putting Policy into Practice for Safe Data-driven Innovation in Health and Care. London, United Kingdom: NHSX; 2019. p. 6-106

19. Junker M, Hoch R, Dengel A, editors. On the Evaluation of Document Analysis Components by Recall, Precision, and Accuracy, Proceedings of the Fifth International Conference on Document Analysis and Recognition. ICDAR ’99 (Cat. No.PR00318). Bangalore, India: IEEE; 1999. p. 713-6

20. Kellogg RT, Vargas J, Barros G, Sen R, Bass D, Mason JR. Segmentation of chronic subdural hematomas using 3D convolutional neural networks. World Neurosurg. 2021. 148: e58-65

21. Kohavi R, editors. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. Proceedings of the 14th International Joint Conference on Artificial Intelligence Vol. 2. IJCAI’95. San Francisco, CA: Morgan Kaufmann Publishers Inc; 1995. p. 1137-43

22. Kolias AG, Chari A, Santarius T, Hutchinson PJ. Chronic subdural haematoma: Modern management and emerging therapies. Nat Rev Neurol. 2014. 10: 570-8

23. Kudo H, Kuwamura K, Izawa I, Sawa H, Tamaki N. Chronic subdural hematoma in elderly people: Present status on Awaji Island and epidemiological prospect. Neurol Med Chir (Tokyo). 1992. 32: 207-9

24. Leroy HA, Aboukaïs R, Reyns N, Bourgeois P, Labreuche J, Duhamel A. Predictors of functional outcomes and recurrence of chronic subdural hematomas. J Clin Neurosci. 2015. 22: 1895-900

25. Luo W, Phung D, Tran T, Gupta S, Rana S, Karmakar C. Guidelines for developing and reporting machine learning predictive models in biomedical research: A multidisciplinary view. J Med Internet Res. 2016. 18: e323

26. Marcilio WE, Eler DM, editors. From Explanations to Feature Selection: Assessing SHAP Values as Feature Selection Mechanism. 2020 33rd SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI). Brazil: IEEE: Recife/Porto de Galinhas; 2020. p. 340-7

27. Miah IP, Tank Y, Rosendaal FR, Peul WC, Dammers R, Lingsma HF. Radiological prognostic factors of chronic subdural hematoma recurrence: A systematic review and meta-analysis. Neuroradiology. 2021. 63: 27-40

28. Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O. Scikit-learn: Machine learning in python. J Mach Learn Res. 2011. 12: 2825-30

29. Rauhala M, Luoto TM, Huhtala H, Iverson GL, Niskakangas T, Öhman J. The incidence of chronic subdural hematomas from 1990 to 2015 in a defined Finnish population. J Neurosurg. 2020. 132: 1147-57

30. Rust T, Kiemer N, Erasmus A. Chronic subdural haematomas and anticoagulation or anti-thrombotic therapy. J Clin Neurosci. 2006. 13: 823-7

31. Santarius T, Kirkpatrick PJ, Kolias AG, Hutchinson PJ. Working toward rational and evidence-based treatment of chronic subdural hematoma. Clin Neurosurg. 2010. 57: 112-22

32. Sharma R, Rocha E, Pasi M, Lee H, Patel A, Singhal AB. Subdural hematoma: Predictors of outcome and a score to guide surgical decision-making. J Stroke Cerebrovasc Dis. 2020. 29: 105180

33. Sidey-Gibbons JA, Sidey-Gibbons CJ. Machine learning in medicine: A practical introduction. BMC Med Res Methodol. 2019. 19: 64

34. Steyerberg EW, Vergouwe Y. Towards better clinical prediction models: Seven steps for development and an ABCD for validation. Eur Heart J. 2014. 35: 1925-31

35. Steyerberg EW, Vickers AJ, Cook NR, Gerds T, Gonen M, Obuchowski N. Assessing the performance of prediction models: A framework for traditional and novel measures. Epidemiol Camb Mass. 2010. 21: 128-38

36. Toi H, Kinoshita K, Hirai S, Takai H, Hara K, Matsushita N. Present epidemiology of chronic subdural hematoma in Japan: Analysis of 63,358 cases recorded in a national administrative database. J Neurosurg. 2018. 128: 222-8

37. Trevethan R. Sensitivity, specificity, and predictive values: Foundations, pliabilities, and pitfalls in research and practice. Front Public Health. 2017. 5: 307

38. Uno M, Toi H, Hirai S. Chronic Subdural Hematoma in Elderly Patients: Is this Disease Benign?. Neurol Med Chir (Tokyo). 2017. 57: 402-9

39. Van Calster B, McLernon DJ, van Smeden M, Wynants L, Steyerberg EW. Calibration: The Achilles heel of predictive analytics. BMC Med. 2019. 17: 230

40. Vickers AJ, van Calster B, Steyerberg EW. A simple, step-by-step guide to interpreting decision curve analysis. Diagn Progn Res. 2019. 3: 18

41. Wang H, Zhang M, Zheng H, Xia X, Luo K, Guo F. The effects of antithrombotic drugs on the recurrence and mortality in patients with chronic subdural hematoma: A meta-analysis. Medicine (Baltimore). 2019. 98: e13972

42. Yamamoto H, Hirashima Y, Hamada H, Hayashi N, Origasa H, Endo S. Independent predictors of recurrence of chronic subdural hematoma: Results of multivariate analysis performed using a logistic regression model. J Neurosurg. 2003. 98: 1217-21

43. Yin M, Vaughan JW, Wallach H, editors. Understanding the Effect of Accuracy on Trust in Machine Learning Models. Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. Glasgow Scotland UK: ACM; 2019. p. 1-12