- Department of Neurosurgery, Barrow Neurological Institute, Phoenix, Arizona, United States,

- Department of Neurosurgery, Hacettepe University, Ankara, Turkey.

Correspondence Address:

Mark C. Preul, Department of Neurosurgery, Barrow Neurological Institute, Phoenix, Arizona, United States.

DOI:10.25259/SNI_162_2023

Copyright: © 2023 Surgical Neurology International. This is an open-access article distributed under the terms of the Creative Commons Attribution-Non Commercial-Share Alike 4.0 License, which allows others to remix, transform, and build upon the work non-commercially, as long as the author is credited and the new creations are licensed under the identical terms.

How to cite this article: Nicolas I. Gonzalez-Romo, Giancarlo Mignucci-Jiménez, Sahin Hanalioglu, Muhammet Enes Gurses, Siyar Bahadir, Yuan Xu, Grant Koskay, Michael T. Lawton, Mark C. Preul. Virtual neurosurgery anatomy laboratory: A collaborative and remote education experience in the metaverse. 17-Mar-2023;14:90. Available from: https://surgicalneurologyint.com/?post_type=surgicalint_articles&p=12197

Abstract

Background: Advances in computer sciences, including novel 3-dimensional rendering techniques, have enabled the creation of cloud-based virtual reality (VR) interfaces, making real-time peer-to-peer interaction possible even from remote locations. This study addresses the potential use of this technology for microsurgery anatomy education.

Methods: Digital specimens were created using multiple photogrammetry techniques and imported into a virtual simulated neuroanatomy dissection laboratory. A VR educational program using a multiuser virtual anatomy laboratory experience was developed. Internal validation was performed by five multinational neurosurgery visiting scholars testing and assessing the digital VR models. For external validation, 20 neurosurgery residents tested and assessed the same models and virtual space.

Results: Each participant responded to 14 statements assessing the virtual models, categorized under realism (n = 3), usefulness (n = 2), practicality (n = 3), enjoyment (n = 3), and recommendation (n = 3). Most responses expressed agreement or strong agreement with the assessment statements (internal validation, 94% [66/70] total responses; external validation, 91.4% [256/280] total responses). Notably, most participants strongly agreed that this system should be part of neurosurgery residency training and that virtual cadaver courses through this platform could be effective for education.

Conclusion: Cloud-based VR interfaces are a novel resource for neurosurgery education. Interactive and remote collaboration between instructors and trainees is possible in virtual environments using volumetric models created with photogrammetry. We believe that this technology could be part of a hybrid anatomy curriculum for neurosurgery education. More studies are needed to assess the educational value of this type of innovative educational resource.

Keywords: Microsurgical anatomy, Neuroanatomy, Photogrammetry, Surgical education, Virtual reality

INTRODUCTION

The neurosurgery research laboratory is the ideal environment for learning complex microsurgery anatomy and advanced surgical techniques necessary to treat challenging neurosurgical diseases.[

Advances in the computer sciences, such as detailed and realistic 3-dimensional (3D) rendering techniques, improved broadband connections, and virtual reality (VR) interfaces,[

The goal of our study was to create a virtual neurosurgery anatomy learning environment in the metaverse that could enable meaningful collaborative participation among neurosurgery trainees and instructors, even when those individuals were in different distant locations. We therefore developed detailed volumetric and immersive 3D models reconstructed from cadaveric specimens and dissections, with the goal of improving neurosurgery training and education.

MATERIALS AND METHODS

An immersive, multiuser, and interactive virtual environment for microsurgery anatomy education was designed using Spatial (Spatial Systems, Inc., Emeryville, CA), a cloud-based computer interface in which users create a personalized virtual space for exhibiting digital objects. We used this space to simulate a neurosurgery anatomy dissection laboratory. The platform also permitted real-time collaboration and cloud storage of files (including 3D models, videos, and images). The virtual space was viewed using standalone VR headsets for a more immersive experience; however, the environment could also be displayed and interacted with in a 2-dimensional (2D) format using a web browser on traditional laptops, tablets, and smartphones.

A database of volumetric anatomy renderings created from cadaveric dissections was developed using photogrammetry, a technique that allows 3D models to be created from high-resolution photographs.[

Anatomy surface rendering using photogrammetry

Photogrammetry[

Photogrammetry techniques for image processing

Photogrammetry techniques used to process images included 360° photogrammetry using Qlone (EyeCue Vision Technologies, Ltd., Yokneam, Israel), stereoscopic photogrammetry, and monoscopic photogrammetry through machine learning.

360° photogrammetry with Qlone

Qlone software was used to create 3D renderings of anatomical dissection specimens using a 360° scanning process performed with the camera of a tablet device (iPad Pro 12.9-inch, Apple, Inc., Cupertino, CA). This software allows multiple images of an anatomical specimen to be captured in a circumferential fashion to create a volumetric model. Qlone is especially useful for creating 3D models of macroscopic anatomy (e.g., the whole brain and brainstem).[

Stereoscopic photogrammetry

Stereoscopic images from the Rhoton Collection[

Monoscopic photogrammetry via machine learning

High-resolution microsurgery photographs of cadaveric neurosurgery approach dissections were used as inputs for a neural network capable of monocular depth estimation, such as MiDaS (Intel Intelligent Systems Lab, Intel Corporation, Santa Clara, CA).[

All 3D reconstructions were performed using a laptop (MacBook Pro, Apple, Inc.) with a 2.5-GHz quad-core Intel Core i7 processor and a dedicated graphics card (NVIDIA GeForce GT 750M; Nvidia Corp., Santa Clara, CA). The 3D scanning was performed using the Qlone app (Qlone 2017-2022, EyeCue Vision Technologies, Ltd.) on an 11-inch second-generation iPad Pro (Apple, Inc.).
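The core geometric step behind monoscopic reconstruction, turning a per-pixel depth estimate into 3D points, can be illustrated with a minimal sketch. This is not the pipeline used in this study. It assumes that the network output has already been converted to positive depth values (MiDaS itself predicts relative inverse depth) and that a simple pinhole camera model applies. The function name `depth_to_points` and the intrinsic parameters `fx`, `fy`, `cx`, and `cy` are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(
    depth: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth map into 3D points with a pinhole camera model.

    depth[v][u] is the assumed depth at pixel column u, row v.
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    Pixels with non-positive depth are treated as missing and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid depth estimate at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Toy 3 x 3 depth map: the center pixel is closest to the camera,
# and the bottom-right pixel has no valid depth.
depth_map = [
    [2.0, 2.0, 2.0],
    [2.0, 1.0, 2.0],
    [2.0, 2.0, 0.0],
]
points = depth_to_points(depth_map, fx=1.0, fy=1.0, cx=1.0, cy=1.0)
```

In practice, the resulting points would be colored with the corresponding photograph pixels and meshed before being imported into a virtual space; those steps are outside the scope of this sketch.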

Educational program

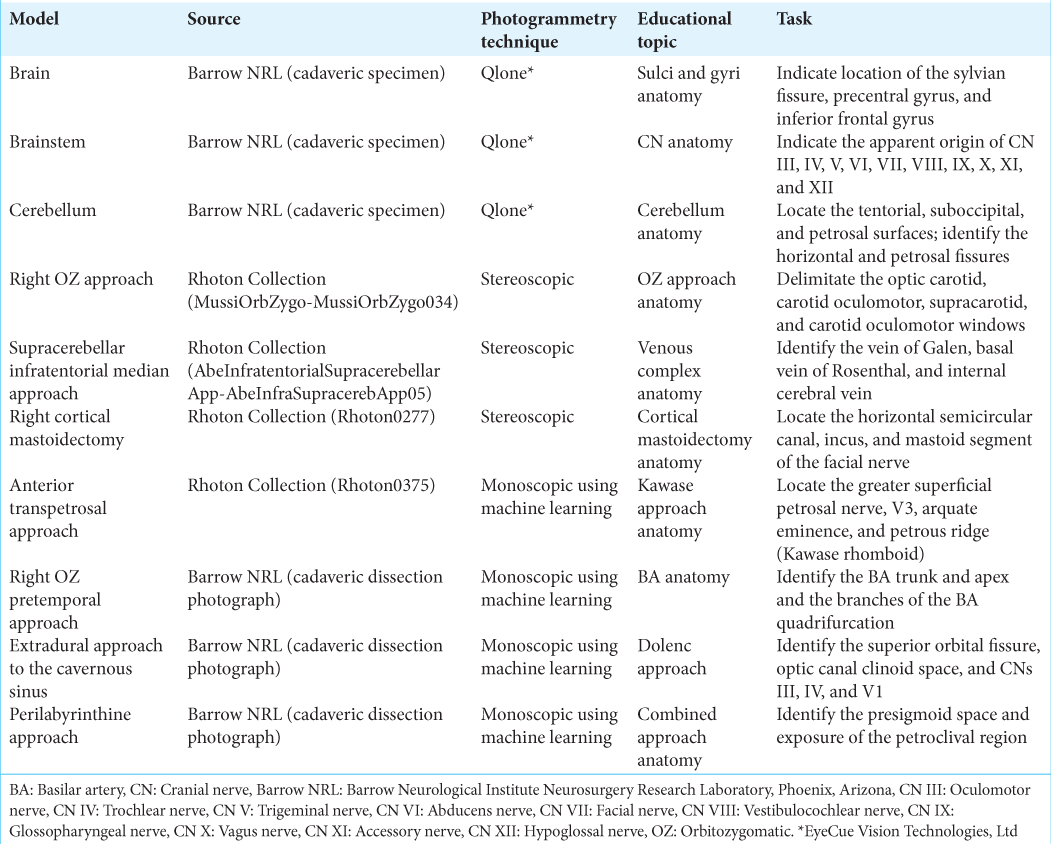

Ten digital 3D-reconstructed anatomical models [

Under direct supervision, all study participants at both geographically distant neurosurgical centers were asked to complete learning and task objectives associated with each of the 10 models to the best of their abilities [

VR testing

All participants in the study used the Oculus Quest 2 standalone VR headset (Meta Platforms, Inc., Menlo Park, CA). This standalone system does not need to be plugged into a computer or power source and offers stereoscopic visualization and depth perception through binocular lenses placed inside the headset. Internet connectivity allows access to cloud-based VR content including immersive environments. The Oculus Quest 2 VR system includes two wireless controllers that are trackable by a camera located at the front of the headset. The controllers feature haptic feedback, and user interaction is accomplished through action buttons, thumb-sticks, and two analog triggers (one for the index finger and the other for the thumb). After the Spatial application was installed on the headset, a virtual space was created, and the 3D objects and models were imported.

Internal and external validation with neurosurgery visiting scholars and trainees

Preliminary testing and system optimization, including model selection and educational methodology, were performed by two trained neurosurgeons at the neurosurgical research laboratory at Barrow Neurological Institute. Internal validation of the developed virtual experience was performed by five multinational neurosurgery department visiting scholars (one each from China, Taiwan, Spain, El Salvador, and the United States; five males; three neurosurgery residents, one third-year medical student, and one fully trained neurosurgeon). The scholars tested the metaverse platform and interacted with the 3D environments. Within the virtual space, participants were given access to various 3D anatomical models, some of which were digitally reconstructed images of common neurosurgical approaches. They were allowed to ask questions about the features and contents of each model. The types of questions they asked included the following: What is the following structure? What is the best perspective to view a given structure? How was the head positioned? What structures are being retracted? Where are the anterior, posterior, medial, and lateral planes? In addition, the observers were allowed to manipulate their view (i.e., magnify, demagnify, rotate, and change position) within the anatomical environments. Afterward, participants were asked to complete a qualitative assessment addressing the following categories: Realism, practicality, usefulness, enjoyment, and recommendation. Responses to 14 statements were scored as follows: Strongly disagree = 1, disagree = 2, neutral = 3, agree = 4, or strongly agree = 5.
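The scoring scheme described above can be expressed as a short sketch. The names `LIKERT_SCORES`, `score_responses`, and `agreement_count` are hypothetical, the example responses are invented for illustration rather than taken from the study data, and treating scores of 4 or 5 as agreement is our reading of "agreement or strong agreement."

```python
from typing import Dict, List

# Five-point scale as described in the text.
LIKERT_SCORES: Dict[str, int] = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def score_responses(labels: List[str]) -> List[int]:
    """Convert response labels to numeric scores (case-insensitive)."""
    return [LIKERT_SCORES[label.strip().lower()] for label in labels]


def agreement_count(scores: List[int]) -> int:
    """Count responses scored as agree (4) or strongly agree (5)."""
    return sum(1 for score in scores if score >= 4)


# Invented example: one participant's answers to five statements.
example_labels = ["Agree", "Strongly agree", "Neutral", "Strongly agree", "Disagree"]
example_scores = score_responses(example_labels)
example_agreement = agreement_count(example_scores)
```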

For external validation, 20 neurosurgery residents (15 men and five women) at Hacettepe University in Ankara, Turkey, participated in the educational activity. The residents were given access to the metaverse meeting space for a virtual neurosurgery anatomy course. The course was taught by a senior resident trained in the use of the virtual platform. Residents were allowed to ask questions, interact with each other in real time within the metaverse, and manipulate the anatomical virtual environment as necessary.

Demographic characteristics, such as level of training, sex, and previous neurosurgery anatomy (i.e., cadaveric) dissection experience, were recorded for both residents at Hacettepe University and visiting scholars at Barrow Neurological Institute.

Statistical analysis

Microsoft Excel 16.6.1.1 (Microsoft Corp., Redmond, WA) was used for descriptive statistics. Percentages were calculated for the demographic characteristics of participants and for the distribution of their qualitative responses. Tableau Software 2022.1.2 (Tableau Software, LLC, Seattle, WA) was used for the graphical representation of the distribution of responses to the qualitative assessment.
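The descriptive calculation is simple enough to reproduce outside a spreadsheet. The following sketch, which is not the authors' Excel workbook, recomputes the agreement percentages from the counts reported in this article (66 of 70 responses for internal validation and 256 of 280 for external validation). The helper name `percent` is hypothetical.

```python
def percent(part: int, total: int) -> float:
    """Return part/total as a percentage rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * part / total, 1)


STATEMENTS = 14  # statements per participant

# Total responses = participants x statements.
internal_total = 5 * STATEMENTS
external_total = 20 * STATEMENTS

# Agreement or strong agreement counts as reported in the article.
internal_pct = percent(66, internal_total)
external_pct = percent(256, external_total)
```

Rounded to one decimal place, these evaluate to 94.3% and 91.4%, consistent with the reported 94% and 91.4%.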

RESULTS

A virtual meeting space was successfully created and 10 immersive anatomy environment models were developed using multiple photogrammetry techniques [

Figure 1:

Ten 3-dimensional (3D) neurosurgery anatomical models created with photogrammetry techniques. Qlone (EyeCue Vision Technologies, Ltd., Agoura Hills, CA) was used to create 3D models of the (a) brain surface, (b) brainstem, and (c) cerebellum. Stereoscopic photogrammetry was used to transform images from the Rhoton Collection into four immersive anatomical models: (d) the right orbitozygomatic (OZ) approach, (e) the supracerebellar infratentorial median approach, (f) the right cortical mastoidectomy, and (g) the anterior transpetrosal approach. Monoscopic photogrammetry using machine learning was used to create 3 models of (h) the right OZ pretemporal approach, (i) the extradural approach to the cavernous sinus, and (j) the perilabyrinthine approach. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

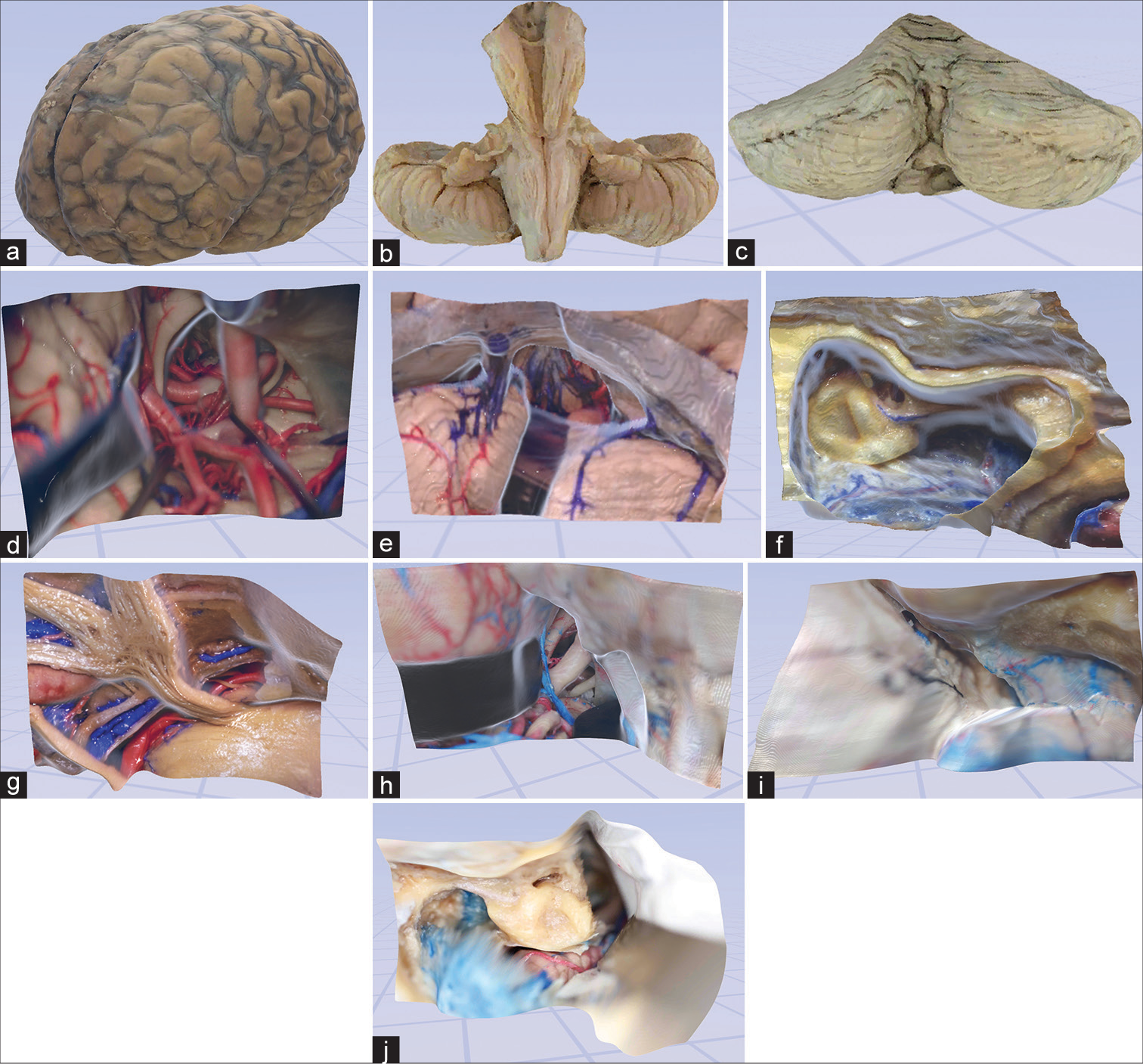

Figure 2:

Examples of user experience in virtual space. (a) The third-person view of a user interacting with the digital objects in the virtual space. (b) An avatar with lifelike physical features is automatically created using a webcam. (c and d) Examples of virtual space allowing real-time collaboration and interaction with 3-dimensional objects and models. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Users of the VR space could change the position of the 3D models by pointing the controller and simultaneously pressing the index finger analog trigger. Each participant rotated and moved the digital model as desired by adjusting the orientation of the controller. Scale adjustments of the 3D models (zoom in and out) were performed as follows: Magnification was increased by pressing the index finger analog trigger while moving both controllers outward simultaneously. Magnification was decreased by moving the controllers inward while pressing the index finger analog trigger.
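One common way to implement this kind of two-controller scaling is to multiply the model's scale by the ratio of the current distance between the controllers to their distance when the gesture began. The sketch below illustrates that idea only; it is an assumption about how such a gesture can work, not a description of how Spatial implements it. The function name `updated_scale` and the clamping limits `min_scale` and `max_scale` are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def updated_scale(
    initial_scale: float,
    start_left: Vec3,
    start_right: Vec3,
    current_left: Vec3,
    current_right: Vec3,
    min_scale: float = 0.1,
    max_scale: float = 10.0,
) -> float:
    """Scale a model by the change in distance between two controllers.

    Moving the controllers apart (outward) enlarges the model;
    moving them together (inward) shrinks it.
    """
    start_distance = math.dist(start_left, start_right)
    if start_distance == 0:
        return initial_scale  # avoid division by zero; leave scale unchanged
    factor = math.dist(current_left, current_right) / start_distance
    return max(min_scale, min(max_scale, initial_scale * factor))


# Controllers start 0.5 m apart, then move outward to 1.0 m apart.
zoom_in = updated_scale(
    1.0, (-0.25, 0.0, 0.0), (0.25, 0.0, 0.0), (-0.5, 0.0, 0.0), (0.5, 0.0, 0.0)
)

# Controllers start 0.5 m apart, then move inward to 0.25 m apart.
zoom_out = updated_scale(
    1.0, (-0.25, 0.0, 0.0), (0.25, 0.0, 0.0), (-0.125, 0.0, 0.0), (0.125, 0.0, 0.0)
)
```

Clamping the result keeps a model from being scaled to an unusable size during an exaggerated gesture; the specific limits here are arbitrary.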

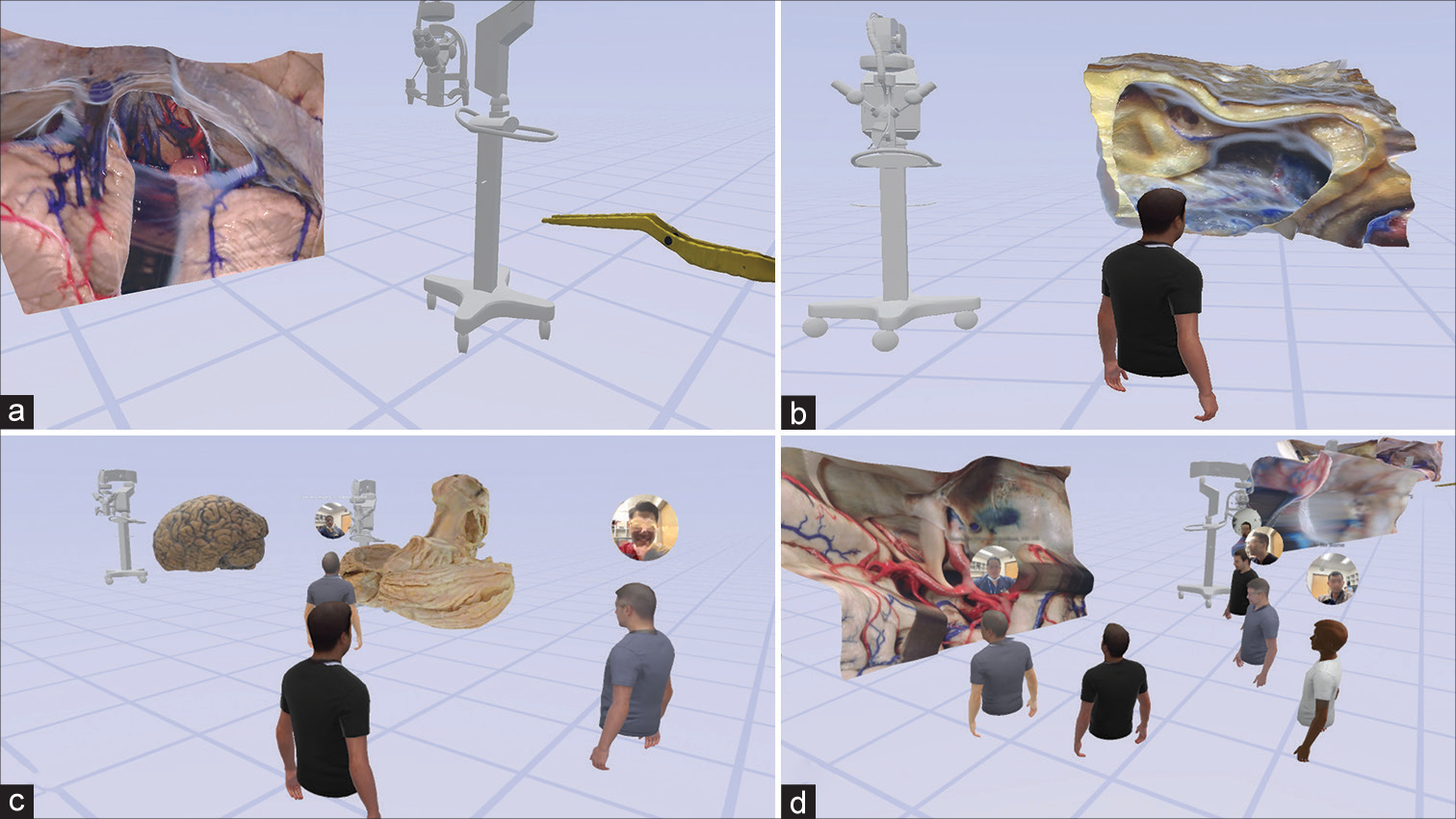

The depth of anatomical structures relative to one another was more easily perceived using VR headsets because the head-tracking feature allowed natural and fluid assessment of different neurosurgical perspectives. However, a 2D display, such as a laptop, tablet, or smartphone, still conveyed a sense of depth through the model's movement, similar to motion parallax. Examples of neurosurgical approach dissections and participant interactions within the metaverse are shown in

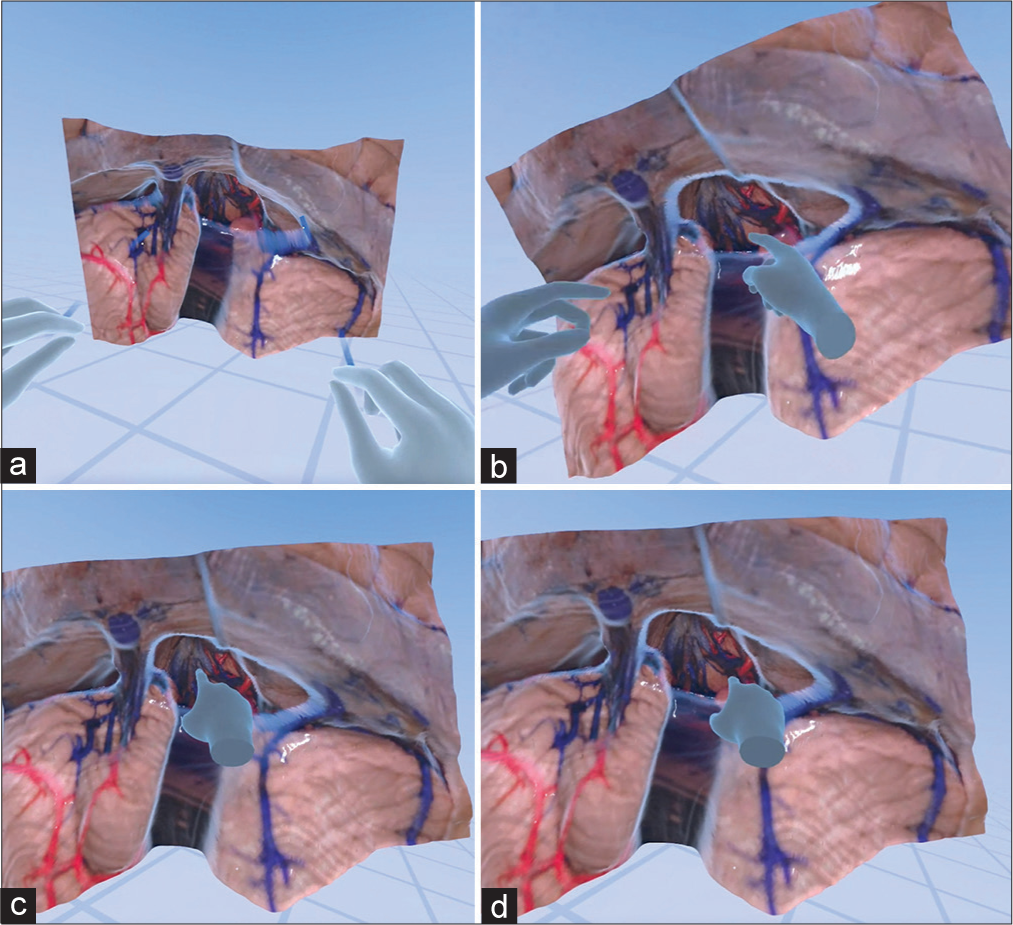

Figure 3:

Virtual reality interaction with a monoscopic photogrammetry model of a right-sided orbitozygomatic pretemporal approach. Using a virtual reality headset and controllers, the surgical trainee is asked to place the model in the surgical position and to point out anatomical structures and surgical windows to the basilar artery. (a) The model is observed in the virtual reality space. (b) A surgical trainee, using a virtual reality headset and controllers, interacts with the model to increase its scale. (c) The trainee indicates the location of the right oculomotor nerve by pointing with the controller. The trainee correctly identifies the optic nerve, supraclinoid internal carotid artery, and oculomotor nerve. (d) The surgical trainee is asked to show the different windows to the basilar artery. At this step, the instructor provides feedback regarding the different pathways to the basilar artery through the optic carotid, carotid-oculomotor, and oculomotor-tentorial windows. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

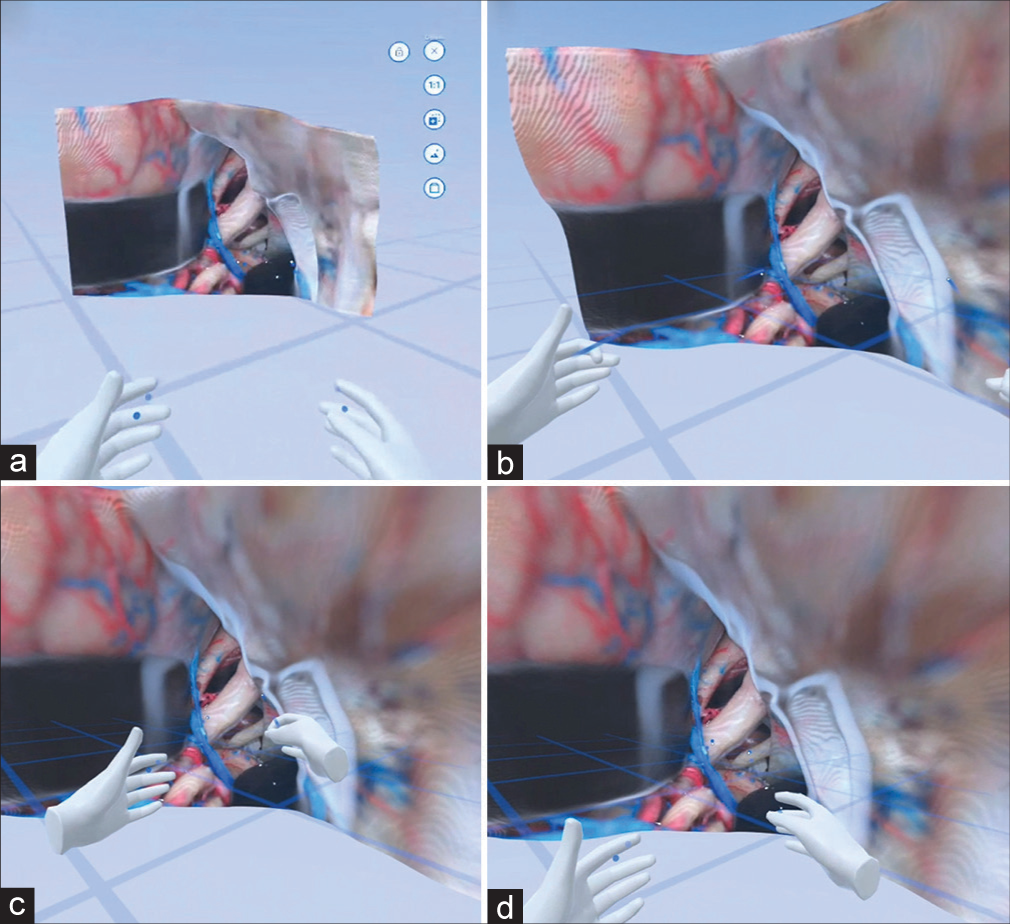

Figure 4:

Virtual reality interaction with a monoscopic photogrammetry model of the supracerebellar infratentorial approach. In this educational exercise, emphasis was placed on the relevant venous anatomy exposed by this approach. (a) The model is observed in the cloud meeting space. (b) A trainee, who is using a virtual reality headset, indicates the location of the vein of Galen, basal vein of Rosenthal, and internal cerebral veins. (c) The trainee shows the location of the internal cerebral veins. (d) The trainee points to the basal vein of Rosenthal. The instructor gives feedback regarding the different perspectives of the median, paramedian, and extreme lateral variants of this approach. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Internal validation

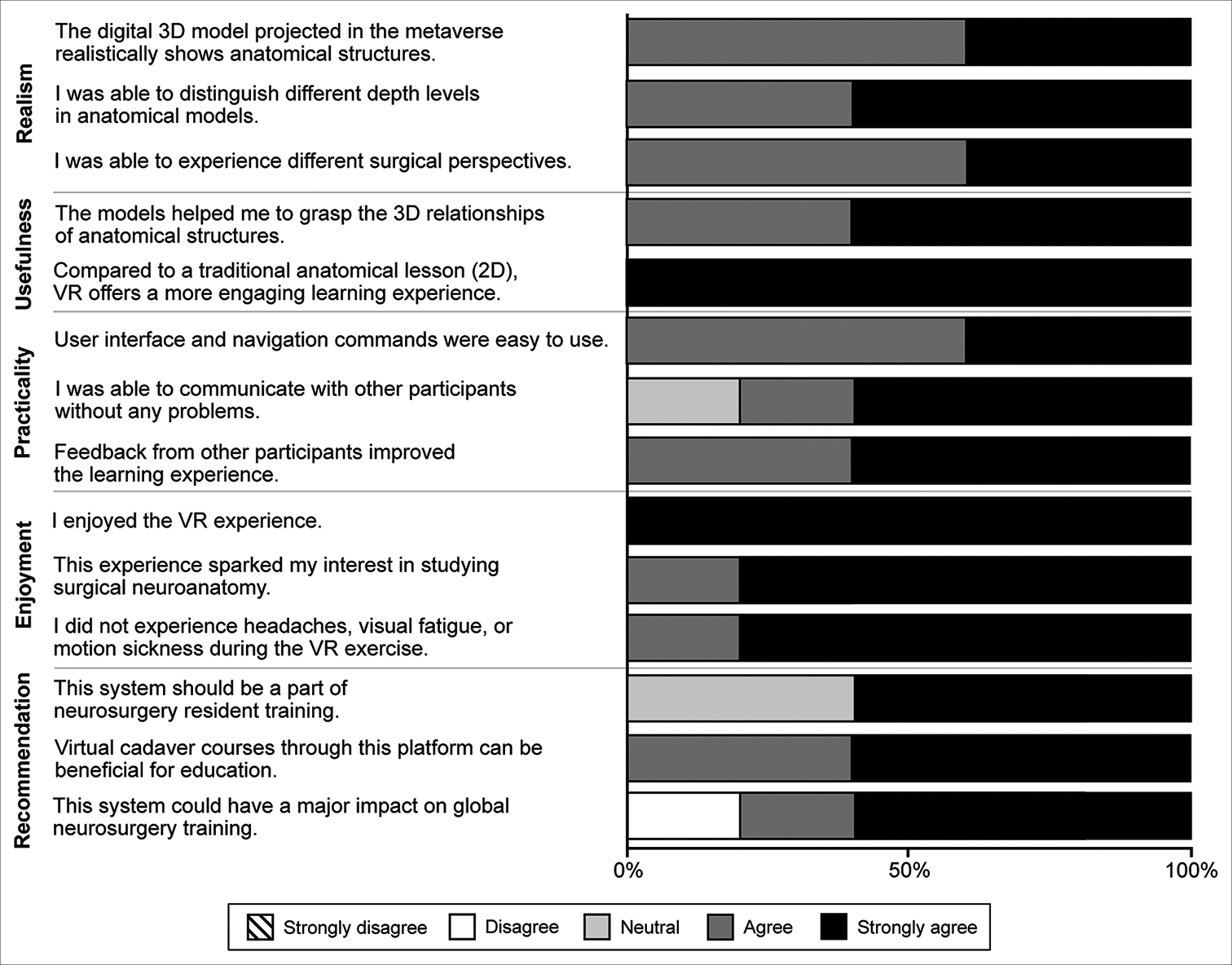

All neurosurgery service visiting scholars at Barrow Neurological Institute completed the qualitative assessment, yielding 70 responses to the 14 statements. Sixty-six (94%) responses indicated agreement or strong agreement with the assessment statements [

Figure 5:

Qualitative assessments of the virtual cranial base dissection laboratory in the metaverse by 5 multinational neurosurgery fellows conducting observerships at Barrow Neurological Institute (Phoenix, Arizona). VR: Virtual reality, 2D: 2-dimensional, 3D: 3-dimensional. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

External validation

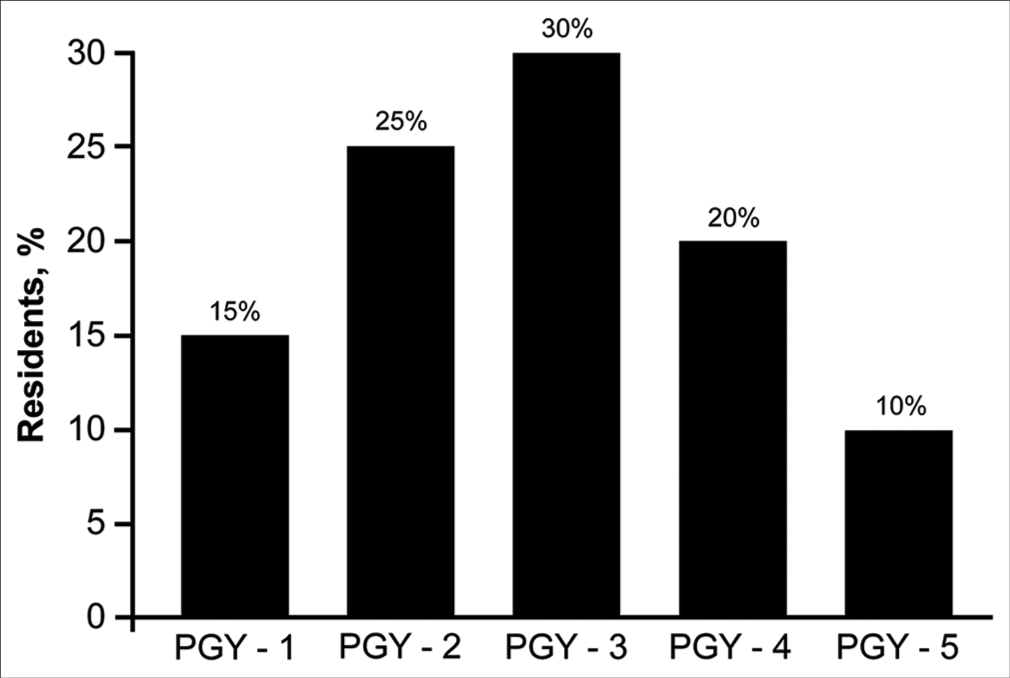

The 20 neurosurgery residents at Hacettepe University who joined the virtual neurosurgery anatomy course also completed the 14-statement qualitative assessment after the course, yielding 280 responses. Thirteen (65%) residents reported that they had no prior neurosurgery anatomical dissection laboratory experience (i.e., they had not utilized cadaveric tissue). The program years of the 20 residents are summarized in

Figure 6:

PGY of the 20 neurosurgery residents in the Department of Neurosurgery at Hacettepe University, who served as a source of external validation. Most of the 20 residents were in their second, third, or fourth year of residency. Used with permission from Barrow Neurological Institute, Phoenix, Arizona. PGY: Program year.

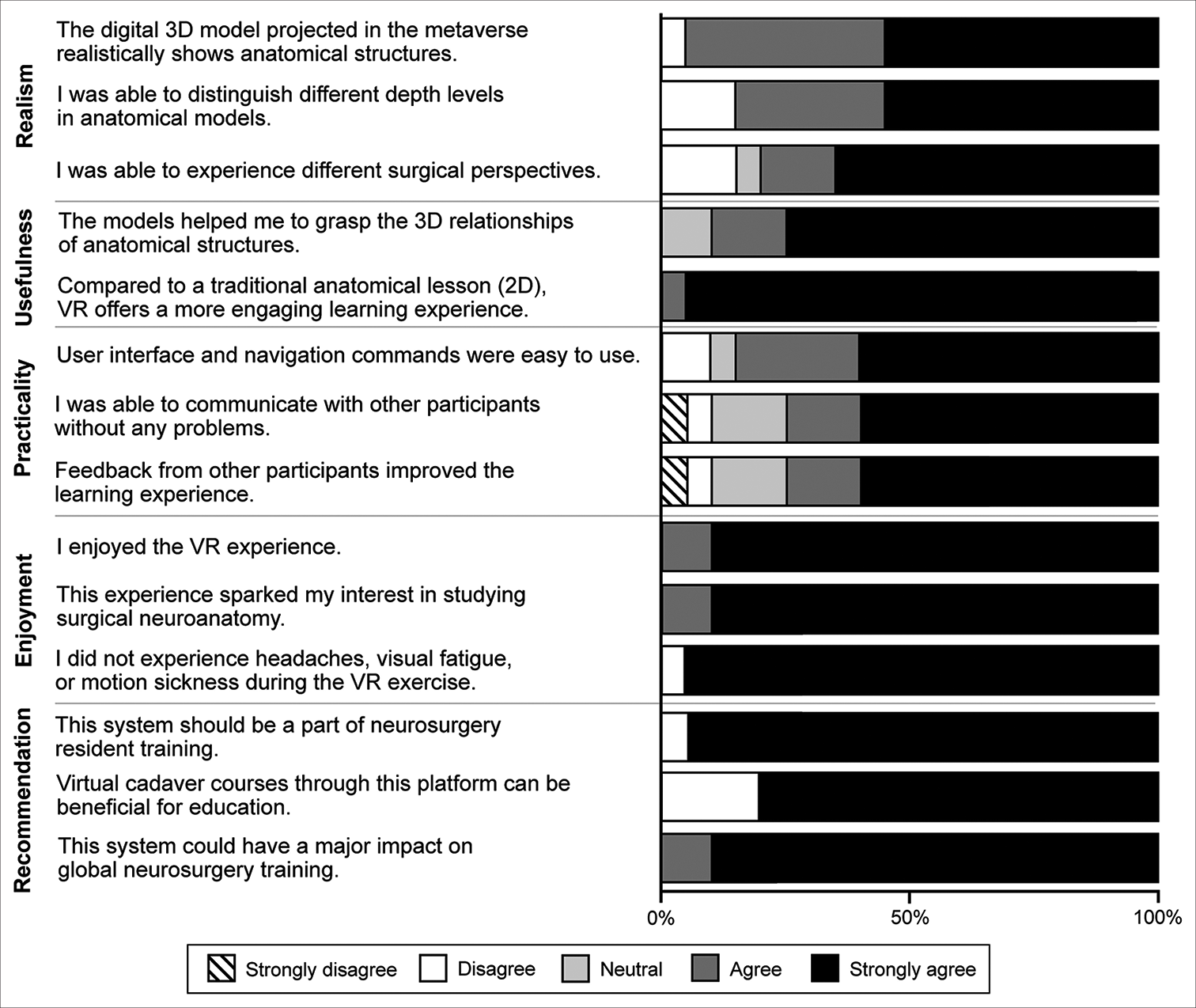

Figure 7:

Qualitative assessments of the virtual cranial base dissection laboratory in the metaverse by 20 neurosurgery residents in the Department of Neurosurgery at Hacettepe University. VR: Virtual reality, 2D: 2-dimensional, 3D: 3-dimensional. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Nineteen (95%) residents strongly agreed that this system should be part of neurosurgery residency training and could have a major impact on global neurosurgery training. Nineteen (95%) residents also strongly agreed that, compared to traditional 2D anatomical lessons, VR offers a more engaging learning experience. All 20 of the neurosurgery residents at Hacettepe University commented that they appreciated the opportunity to use the virtual system and would like to have such technology implemented in future courses.

DISCUSSION

In this pilot study, a virtual meeting space was developed for multiuser neurosurgery training purposes using photogrammetry-developed immersive anatomy models with predefined learning objectives [

3D rendering of neurosurgical anatomy

Pioneering work on the development of stereoscopic interactive anatomical simulations was performed almost 15 years ago with the use of a robotic microscope for sequential image acquisitions.[

The technology used in our study can be obtained relatively easily and inexpensively, and the procedures are not technically demanding, except for the anatomical dissections themselves, which for best effect should be exquisitely performed on cadaveric tissue of excellent quality. We used the immersive anatomy model environments developed with various forms of photogrammetry, including a novel technique based on the use of machine learning for the 3D rendering of monoscopic images (i.e., monoscopic photogrammetry).[

Advantages of virtual space and system

Although the study was conducted remotely, real-time interaction of users with volumetric anatomical models could be supervised with immediate feedback from senior neurosurgery staff members. In our opinion, interpersonal collaboration is an important aspect of a meaningful educational experience, especially for teaching and learning complex microneurosurgical anatomy or unfamiliar neurosurgical approaches. Integrated and multimodal computerized teaching methods have been suggested as an alternative to traditional cadaver dissection.[

As exemplified by the results of this study, active learning with 3D anatomical models involving multiple regions around the world has been made possible because of advances in communication technology. International participation and sharing of knowledge in virtual environments using “hands-on” interactions with immersive anatomical models can be a way to narrow the gap in practical surgical training in resource-limited settings. In our study, a metaverse of a virtual neuroanatomy laboratory space was created to serve as a hub of neurosurgery anatomical resources. Other potential uses for this technology include expert analysis of patient-specific neuroimaging studies with volumetric 3D renderings, a virtual exhibit of new surgical instrumentation, practical applications for academic meetings with remote users, and even medicolegal situations. Further implementation of unsupervised measurement of performance, with assessment of technical skills or performance progression using machine learning methods, could be the next step for virtual multiuser practical simulations.[

Limitations

Limitations of this study include the small number of virtual models and participants. Furthermore, although our photogrammetry models were highly detailed, they are static and lack haptic feedback. Thus, our 3D reconstruction techniques require further refinement. Finally, our study lacked performance metrics to evaluate the educational benefits of this approach. A performance grading system will be pursued in future studies.

CONCLUSION

Virtual interaction and collaboration between neurosurgery trainees and instructors are possible in the metaverse, even from locations on opposite sides of the world. Immersive models can be created from cadaveric dissection specimens using photogrammetry and demonstrated in virtual anatomy cloud spaces. Our results demonstrate the possible utility of such technology for augmenting neurosurgery anatomy training, simulating surgery, and gaining familiarity with surgical approaches. Incorporating such virtual educational experiences into a traditional cadaveric dissection curriculum could improve the learning of anatomy by surgical trainees.

Declaration of patient consent

Patient’s consent not required as there are no patients in this study.

Financial support and sponsorship

Newsome Chair of Neurosurgery Research held by Dr. Mark Preul and the Barrow Neurological Foundation.

Conflicts of interest

There are no conflicts of interest.

Disclaimer

The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of the Journal or its management. The information contained in this article should not be considered to be medical advice; patients should consult their own physicians for advice as to their specific medical needs.

Acknowledgments

We thank the staff of Neuroscience Publications at Barrow Neurological Institute for assistance with manuscript preparation.

References

1. Alaraj A, Luciano CJ, Bailey DP, Elsenousi A, Roitberg BZ, Bernardo A. Virtual reality cerebral aneurysm clipping simulation with real-time haptic feedback. Neurosurgery. 2015. 11: 52-8

2. Alharbi Y, Al-Mansour M, Al-Saffar R, Garman A, Alraddadi A. Three-dimensional virtual reality as an innovative teaching and learning tool for human anatomy courses in medical education: A mixed methods study. Cureus. 2020. 12: e7085

3. Alkadri S, Ledwos N, Mirchi N, Reich A, Yilmaz R, Driscoll M. Utilizing a multilayer perceptron artificial neural network to assess a virtual reality surgical procedure. Comput Biol Med. 2021. 136: 104770

4. Balogh A, Preul MC, Schornak M, Hickman M, Spetzler RF. Intraoperative stereoscopic QuickTime Virtual Reality. J Neurosurg. 2004. 100: 591-6

5. Balogh AA, Preul MC, Laszlo K, Schornak M, Hickman M, Deshmukh P. Multilayer image grid reconstruction technology: Four-dimensional interactive image reconstruction of microsurgical neuroanatomic dissections. Neurosurgery. 2006. 58: ONS157-65

6. Bernardo A. Virtual reality and simulation in neurosurgical training. World Neurosurg. 2017. 106: 1015-29

7. Chawla S, Devi S, Calvachi P, Gormley WB, Rueda-Esteban R. Evaluation of simulation models in neurosurgical training according to face, content, and construct validity: a systematic review. Acta Neurochir (Wien). 2022. 164: 947-66

8. De Benedictis A, Nocerino E, Menna F, Remondino F, Barbareschi M, Rozzanigo U. Photogrammetry of the human brain: A novel method for three-dimensional quantitative exploration of the structural connectivity in neurosurgery and neurosciences. World Neurosurg. 2018. 115: e279-91

9. Estai M, Bunt S. Best teaching practices in anatomy education: A critical review. Ann Anat. 2016. 208: 151-7

10. Farhadi DS, Jubran JH, Zhao X, Houlihan LM, Belykh E, Meybodi AT. The neuroanatomic studies of Albert L. Rhoton Jr, in historical context: An analysis of origin, evolution, and application. World Neurosurg. 2021. 151: 258-76

11. Gelinas-Phaneuf N, Choudhury N, Al-Habib AR, Cabral A, Nadeau E, Mora V. Assessing performance in brain tumor resection using a novel virtual reality simulator. Int J Comput Assist Radiol Surg. 2014. 9: 1-9

12. Gonzalez-Romo NI, Hanalioglu S, Mignucci-Jiménez G, Abramov I, Xu Y, Preul MC. Anatomical depth estimation and three-dimensional reconstruction of microsurgical anatomy using monoscopic high-definition photogrammetry and machine learning. Oper Neurosurg (Hagerstown). 2022. p.

13. Gurses ME, Gungor A, Hanalioglu S, Yaltirik CK, Postuk HC, Berker M. Qlone(R): A simple method to create 360-degree photogrammetry-based 3-dimensional model of cadaveric specimens. Oper Neurosurg (Hagerstown). 2021. 21: E488-93

14. Hanalioglu S, Romo NG, Mignucci-Jimenez G, Tunc O, Gurses ME, Abramov I. Development and validation of a novel methodological pipeline to integrate neuroimaging and photogrammetry for immersive 3D cadaveric neurosurgical simulation. Front Surg. 2022. 9: 878378

15. Henn JS, Lemole GM, Ferreira MA, Gonzalez LF, Schornak M, Preul MC. Interactive stereoscopic virtual reality: A new tool for neurosurgical education. Technical note. J Neurosurg. 2002. 96: 144-9

16. Kirkman MA, Ahmed M, Albert AF, Wilson MH, Nandi D, Sevdalis N. The use of simulation in neurosurgical education and training. A systematic review. J Neurosurg. 2014. 121: 228-46

17. Kye B, Han N, Kim E, Park Y, Jo S. Educational applications of metaverse: Possibilities and limitations. J Educ Eval Health Prof. 2021. 18: 32

18. Petriceks AH, Peterson AS, Angeles M, Brown WP, Srivastava S. Photogrammetry of human specimens: An innovation in anatomy education. J Med Educ Curric Dev. 2018. 5: 2382120518799356

19. Ranftl R, Bochkovskiy A, Koltun V. Vision transformers for dense prediction. ArXiv. 2021. 2103: 13413

20. Ranftl R, Lasinger K, Hafner D, Schindler K, Koltun V. Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer. IEEE Trans Pattern Anal Mach Intell. 2022. 44: 1623-37

21. Rehder R, Abd-El-Barr M, Hooten K, Weinstock P, Madsen JR, Cohen AR. The role of simulation in neurosurgery. Childs Nerv Syst. 2016. 32: 43-54

22. Schirmer CM, Elder JB, Roitberg B, Lobel DA. Virtual reality-based simulation training for ventriculostomy: An evidence-based approach. Neurosurgery. 2013. 73: 66-73

23. Sorenson J, Khan N, Couldwell W, Robertson J. The Rhoton Collection. World Neurosurg. 2016. 92: 649-52

24. Thali MJ, Braun M, Wirth J, Vock P, Dirnhofer R. 3D surface and body documentation in forensic medicine: 3-D/CAD photogrammetry merged with 3D radiological scanning. J Forensic Sci. 2003. 48: 1356-65

25. Tomlinson SB, Hendricks BK, Cohen-Gadol A. Immersive three-dimensional modeling and virtual reality for enhanced visualization of operative neurosurgical anatomy. World Neurosurg. 2019. 131: 313-20

26. Winkler-Schwartz A, Yilmaz R, Mirchi N, Bissonnette V, Ledwos N, Siyar S. Machine learning identification of surgical and operative factors associated with surgical expertise in virtual reality simulation. JAMA Netw Open. 2019. 2: e198363

27. Zhou K, Meng X, Cheng B. Review of stereo matching algorithms based on deep learning. Comput Intell Neurosci. 2020. 2020: 8562323

28. Zhou QY, Park J, Koltun V. Open3D: A modern library for 3D data processing. ArXiv. 2018. 1801: 09847