- Department of Neurosurgery, Loma Linda University Medical Center, Loma Linda, CA, United States.

- University of California Los Angeles, Los Angeles, CA, United States.

- Department of Neurosurgery, School of Medicine, State University of New York at Stony Brook, New York, United States.

Correspondence Address:

James I. Ausman

Department of Neurosurgery, Loma Linda University Medical Center, Loma Linda, CA, United States.

DOI:10.25259/SNI_199_2020

Copyright: © 2020 Surgical Neurology International. This is an open-access article distributed under the terms of the Creative Commons Attribution-Non Commercial-Share Alike 4.0 License, which allows others to remix, tweak, and build upon the work non-commercially, as long as the author is credited and the new creations are licensed under the identical terms.

How to cite this article: James I. Ausman1,2, Nancy Epstein3, James L. West1. Comparative metrics of neurosurgical scientific journals: What do they mean to readers?. 27-Jun-2020;11:169

How to cite this URL: James I. Ausman1,2, Nancy Epstein3, James L. West1. Comparative metrics of neurosurgical scientific journals: What do they mean to readers?. 27-Jun-2020;11:169. Available from: https://surgicalneurologyint.com/surgicalint-articles/comparative-metrics-of-neurosurgical-scientific-journals-what-do-they-mean-to-readers/

Abstract

Background: In regard to scientific information, are we effectively reaching the universe of physicians in the 21st century, all of whom have different backgrounds, practice environments, educational experiences, and varying degrees of research knowledge?

Methods: A comparison of the top nine neurosurgery journals based on various popular citation indices and also on the digital metric, Readers (Users)/month, was compiled from available metrics and from internet sources.

Results: Major differences were found between the ranking by Readers (Users)/month and the rankings by the various citation indices. It is obvious that the citation indices do not measure the number of readers of a publication. Which metric should be used in judging the value of a scientific paper? The answer to that question depends on the reader's interest in the scientific information. It appears that the academic scientist may have a different reason for reading a scientific publication than a physician caring for a patient.

Conclusions: There needs to be more than one type of metric that measures the value and “Impact” of a scientific paper based on how physicians learn.

Keywords: Citation indices, Comparison publication metrics, How do physicians learn? Readers (Users) month

INTRODUCTION: THE DEVELOPMENT OF SCIENTIFIC PUBLISHING

History of development of scientific journals

Wikipedia states, “The history of scientific journals dates from 1665, when the French Journal des sçavans and the English Philosophical Transactions of the Royal Society first began systematically publishing research results. Over a thousand, mostly ephemeral, journals were founded in the 18th century, and the number has increased rapidly since then.”

The growth of journal publishing

From a paper written in 1990, “The number of scholarly journals in all fields (scientific and others) has risen from 70,000 to 108,590 over the past 20 years, according to the Bowker/Ulrich’s database”…

The business of scientific journals

Scientific papers are usually published by private publishing companies, which charge a fee either for a subscription to a journal or for access to single papers. The scientific content is critically reviewed by reviewers selected by the sponsoring organization or society. Based on those reviews, manuscripts are accepted, returned for revision, or rejected. The publishing houses provide the electronic systems for coordinating the peer review process and the mechanisms for publishing scientific papers. These publishers receive revenues for those services. In addition, the sponsoring societies or organizations receive revenues from the publication of the manuscripts as part of the subscription prices and advertising sold.

Beginning of Open (Free) Access to scientific papers for all

In the past, access to scientific articles was only possible for those who bought subscriptions to a journal or whose university, hospital, or medical center purchased the subscriptions for their associated physicians to use. Others, who did not have this access, could not read these scientific papers unless they subscribed to the journals themselves, which was costly. The more journals a physician read, the higher the subscription costs, which potentially limited the number of journals a private user could read. The establishment of internet-based scientific journals allowed “Open (Free) Access” to those journals for everyone, everywhere, at no cost. With the internet and Open (Free) Access publishing, more journal information became universally available.

Open access publishing has since flourished

“Open Access” publishing has since flourished. Although it has taken many different forms, the predominant financial impact has been to shift costs from the readers (through personal, institutional, or organizational subscriptions) to the authors for publishing their papers.

Cost to publish in open access journals

The author costs to publish in open access journals range from hundreds to thousands of dollars.

National Institutes of Health (NIH) demands open access by 2024: scientific information for all immediately and free

If the research performed comes from public funds through a government grant, the NIH believes that this information should be freely available to readers everywhere within 12 months of publication.

The NIH Public Access Policy implements Division F, Section 217 of PL 111-8 (Omnibus Appropriations Act, 2009). The law states:

“The Director of the “NIH” shall require in the current fiscal year and thereafter that all investigators funded by the NIH submit or have submitted for them to the National Library of Medicine’s PubMed Central an electronic version of their final, peer-reviewed manuscripts upon acceptance for publication, to be made publicly available no later than 12 months after the official date of publication: Provided, that the NIH shall implement the public access policy in a manner consistent with copyright law.”

How “open access” impacted the journal publishing business

The shift to internet open access publishing allowed more space to publish material than was previously available in printed journals, at no additional cost for publication on the internet, and so represented competition to the established publishing houses. To ease the financial burden of the loss of revenue on the publishers, a transitional plan to “Open Access” by 2024 was devised. “Plan S, due to begin in 2021, requires researchers funded by participating agencies to ensure that their papers are free to read on publication. To ease the transition, the plan allows authors to publish in a ‘hybrid’ journal, with a mix of free and pay-walled content, but only if the publisher commits to shifting the journal to entirely open access by 2024.”

Countries and large universities urge immediate publication from large publishing houses while demanding lower charges

“Universities fear they could end up paying more to help their scientists publish their work than they do now for bulk subscriptions from the publishers.” Large journal users, such as universities or hospitals, would be paying not only bulk subscription costs to access the journals they buy for their readers but also the added author costs for publication of scientific papers.

INDEXING SYSTEMS REFLECTING CITATIONS OF PUBLISHED PAPERS

How do indexing systems work and how are they used?

Indexing systems were developed to relate the number of cited published papers over a defined time period to the total number of papers published by the journal in that same period. This figure was known as the “Citation Index.” These indices were used as a measure of the importance of a paper and of the journals in which those papers were published. However, these indices did not measure the number of readers of a paper. With the internet, the actual number of readers of a journal and the number of times an individual paper is read can be measured electronically. It appears that the “Citation Index” is no longer the sole determinant of a paper’s quality or impact. Rather, other measures are needed and already exist that also reflect interest in a publication, for example, the number of readers who read or download a paper.
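The ratio described above can be sketched as a short calculation. This is only an illustration of the general idea, not the formula of any particular indexing system; the function name `citation_ratio` and the example numbers are hypothetical.

```python
def citation_ratio(citations: int, papers_published: int) -> float:
    """Citations counted in a period divided by papers published in that period."""
    if papers_published <= 0:
        raise ValueError("papers_published must be positive")
    return citations / papers_published


# Hypothetical numbers for illustration only: 450 citations to 300 papers.
example = citation_ratio(450, 300)
print(f"{example:.2f}")  # 1.50
```

Note that nothing in this calculation counts readers: a paper that is widely read but never cited contributes to the denominator only.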

The citation indices were used (a) to assess the number of times a paper was cited and (b) in academic credentialing for promotion in academic ranks, based in large part on the number and impact of scientific articles published. In addition, (c) public funding bodies often require the results of scientific research to be published in scientific journals that have high citation indices.

How often are papers cited?

In his 1990 paper, Hamilton states, “Citations, according to the conventional wisdom, are the glue that binds a research paper to the body of knowledge in a particular field and a measure of the paper’s importance. So what fraction of the world’s vast scientific literature is cited at least once?”…

Do the indexing systems reflect the interests of those who are searching for information on patient care? Or do these grading systems only reflect a subset of readers consisting of academics?

“To critics of the academic promotion system like the University of Michigan President James Duderstadt, the growing number of journals and the high number of uncited articles simply confirm their suspicion that academic culture encourages spurious publication.” Duderstadt stated, “It is pretty strong evidence of how fragmented scientific work has become, and … the kinds of pressures which drive people to stress the number of publications rather than quality of publications.” Duderstadt said that most of that pressure is rooted in the struggle for grants and promotions. “The obvious interpretation is that the ‘Publish or Perish’ syndrome is still operating in force,” said David Helfand, chairman of the Astronomy Department at Columbia University.

Who reads a scientific paper and why?

The research scientist looks for papers that are related to his/her research interests and will use those papers in his/her scientific reporting. The clinician-scientist looks at articles for information that will affect his/her practice but that he or she may not necessarily use in publishing a paper. Does that distinction make any article less important or give it less “impact?” What, therefore, is the proper metric to be used to evaluate a manuscript’s value to the reader? And does the grading system bias the selection against articles considered less important to bolster the journal’s perceived citation grade? Should scholarly productivity for clinicians be measured using strategies similar to those used in basic science research or the life sciences? In other disciplines, “research for research’s sake” is acceptable, but in medicine, research should always have the end goal of patient care and translation to the bedside.

How do physicians learn?

This paper compares the metrics of the different citation indices with the data on Readers (Users)/Month as another measure of readership. Who reads medical journals and why? How do physicians learn? The purpose of this paper is to provide data and metrics on some of the most common neurosurgical journals, so that the reader can decide the relative value of each journal for his or her needs, and to focus attention on the system of evaluation of scientific publications.

METHODS

List of neurosurgical journals and request for metrics

A list of the neurosurgical journals was obtained from Google Scholar and other citation databases. The Google ranking system for 20 neurosurgical journals was used as the basis for the listing of journals. The website of each journal was examined to provide the metrics sought in this paper. Those metrics included the publishing organization, the journal title, the number of Readers(Users)/Month, the Google Citation index factor mean and median,[

Entries for cited journals verified by managing editors or editors

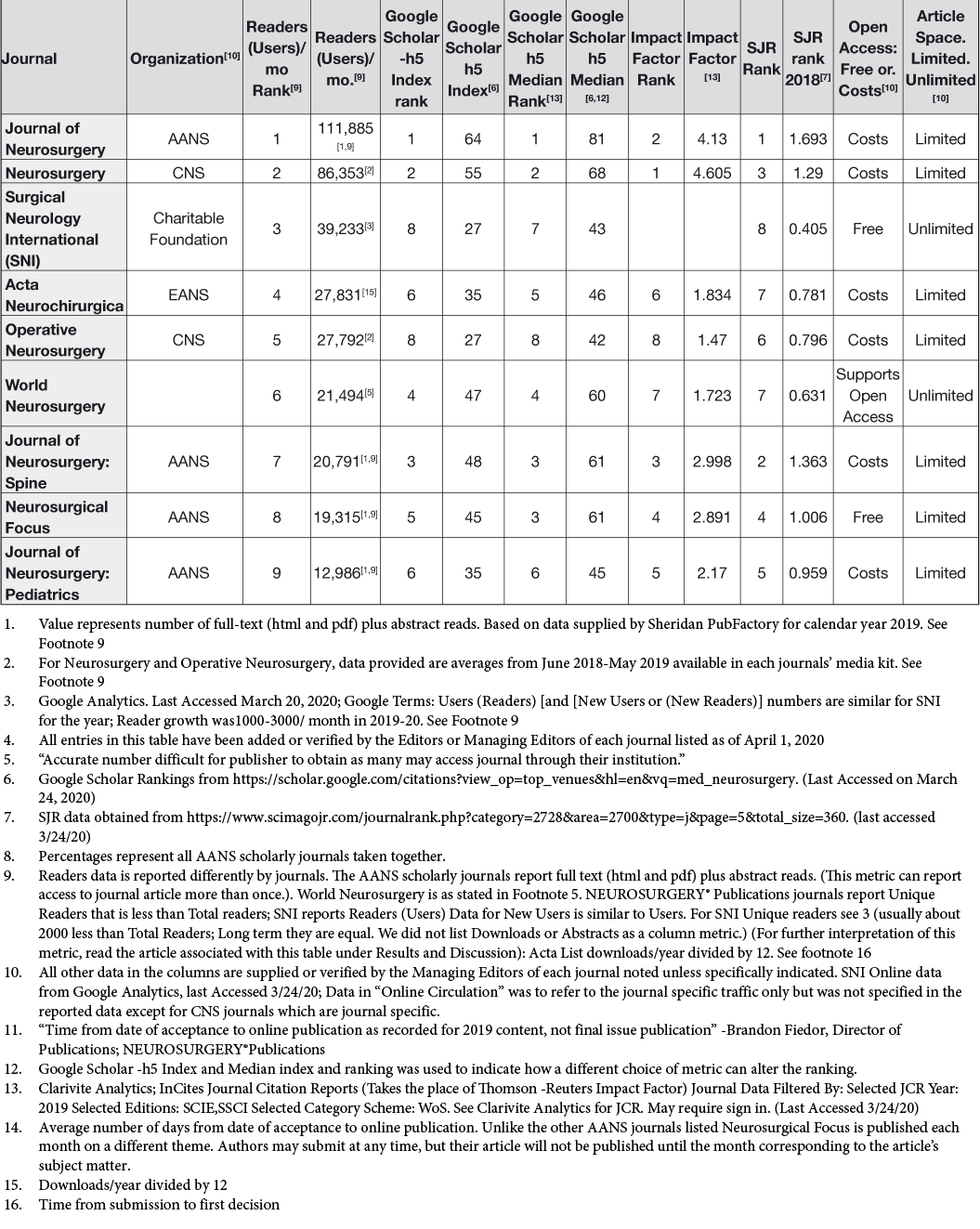

Table 1A: Neurosurgery journals metrics comparisons as of April 19, 2020.

Table 1B: Neurosurgery journals metrics comparisons as of April 19, 2020.

RESULTS AND DISCUSSION

Readers (Users) per month is not a standardized metric

It is immediately evident that the ranking of the journals varies depending on the metric used to evaluate them. We asked for the number of Readers (Users) each month from the listed journals’ editors. Various answers were given including: the full text downloads, pdf files/month, downloads per year/12, total Readers/month, html versions, or new Readers (Users)/month. Others used different metrics. Each journal reported the Users/Readers/month metric in their own way as is indicated in their footnotes to

Three major indexing systems: Google Scholar, Impact Factor, and SJR had different results for specific journals.

Indexing systems have been used as a measure of how many citations a journal received for its publications. However, with the introduction of the internet, actual data can now be determined regarding the number of Readers (Users)/Month, total readers of specific papers, downloads, and locations of readers, as used in this table.
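The way a ranking can change with the chosen metric, and the need to bring differently reported reader counts onto a common monthly basis, can be sketched as follows. This is a minimal illustration under stated assumptions: the journal names and all values are hypothetical placeholders and are not taken from the tables in this paper, and the helper names `rank_by` and `monthly_from_yearly` are invented for the example.

```python
def rank_by(metric: dict[str, float]) -> list[str]:
    """Return journal names ordered from highest to lowest metric value."""
    return sorted(metric, key=metric.get, reverse=True)


def monthly_from_yearly(downloads_per_year: float) -> float:
    """Convert a yearly download total to a monthly average (year / 12)."""
    return downloads_per_year / 12


# Hypothetical placeholder values, not data from this paper.
citation_index = {"Journal A": 4.0, "Journal B": 2.5, "Journal C": 1.0}
readers_per_month = {
    "Journal A": monthly_from_yearly(120_000),  # reported as downloads per year
    "Journal B": 8_000,                         # reported per month
    "Journal C": 30_000,                        # reported per month
}

print(rank_by(citation_index))     # ['Journal A', 'Journal B', 'Journal C']
print(rank_by(readers_per_month))  # ['Journal C', 'Journal A', 'Journal B']
```

Even after conversion to a monthly figure, counts reported under different definitions (full-text downloads, PDF files, new users) are not strictly comparable, so such a ranking should be read with caution.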

“How do we rank the importance of a journal or the journal’s value to its readers?”

Before the Internet

Given these different types of information, the question then becomes, “How do we rank the importance of a journal’s value to its readers?” The answer depends on what readers are looking for in the journals they read. Before the internet, the citation indices, used by the academic community, were based on the number of citations a journal received. There was no way to know from these indices how many people read the journal or a paper. Nor was it possible to determine from the indexing systems how many readers read a paper but did not cite it in a paper of their own.

After the internet

After the internet, there was an opportunity to learn exactly how many people accessed a specific journal or its articles. Furthermore, there were more journals published from which needed information could be obtained by readers. For example, those looking to publish a paper would likely be interested in publishing it in a journal with a higher citation index, as well as with a faster publication time. So, publication time becomes a metric in which readers are interested. Hence, which citation index should be used? Is it more important to know that an article is read by large numbers of people or by its citation index factor which does not measure the number of readers? If an article is read by thousands of readers even though it is not cited by another research publication, does it have less value as a publication? How do we measure that value? We do not know.

The citation indices/metrics do not truly reflect the actual number who read the paper

New metrics being considered

Academic medical centers are also re-evaluating the way the influence of scholarly work is measured. Several institutions across the country have recognized that, in the digital age, the transmission of scientific knowledge is not fully appreciated when only journal citations are considered. These academic centers have started to integrate alternative metrics (altmetrics) into their assessment of the impact of scientific manuscripts, and even into their considerations for tenure. Previous authors have proposed guidelines and recommendations for including altmetrics in the assessment of academic productivity, incorporating social media views, downloads, and followers into the evaluation of an academician’s overall scholarship.

In the transmission of information, what do our metrics mean?

Hence, what do our metrics mean? The deeper meaning of

Are case reports anecdotes or are they scientific evaluations of unusual observations in clinical practice?

As an example, are case reports “anecdotes,” a word commonly used in scientific discussions about these scientific reports? Merriam-Webster defines the word anecdote as “a usually short narrative of an interesting, amusing, or biographical incident,”[

In the coronavirus epidemic, is there not a desperate need for information from case reports or small case series to propel larger studies of treatment choices? As the threat of death looms for patients, are the case observations not of great significance? Yet the scientific community wants to wait for a vaccine to be developed or for an RCT to validate observations. Although this approach is ideal, it is not reasonable to the clinician fighting to save the life of a patient today, when the ideal information is not available. People facing near-death problems do not have the years that RCTs take to provide answers. Is waiting the decision you would make for your family or patient? How do physicians learn?

In an unpublished survey of its readers, SNI found that physicians regarded “Talking with Colleagues” as among their most valued sources of information: it was as important as reading abstracts and ranked higher than attendance at all types of meetings, podcasts, CME courses, and reading complete journals. How do we evaluate the metric, “Talking with Colleagues?” How do physicians learn?

How do physicians learn?

The fundamental question is, “How do physicians learn?” There is little information in the literature on this question. Are we effectively reaching the universe of physicians in the 21st century, all of whom have different backgrounds, practice environments, educational experiences, and varying degrees of research knowledge? Are our grading systems for judging scientific papers a valid way to measure the “impact” of these papers to most physicians? Are multiple metrics in order?

Fundamentally, are papers in scientific journals being written for the benefit of the doctor authors and institutions, for the benefit of the patients, or both? Our metrics of evaluation do not reflect that such a distinction is being made.

Disclaimer

The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of the Journal or its management.

Declaration of patient consent

Patients’ consent is not required, as no patient’s identity is disclosed or compromised.

Financial support and sponsorship

This paper was supported by a donation to Surgical Neurology International from the James I. and Carolyn R. Educational Foundation.

Conflicts of interest

There are no conflicts of interest.

Acknowledgements

The authors wish to thank the following people for their advice and comments on improving and adding data to this manuscript: Miguel A. Faria, MD, Associate Editor in Chief in Socioeconomics, Politics, Medicine, and World Affairs, Surgical Neurology International (SNI); Edward C. Benzel, MD, Editor in Chief, World Neurosurgery; Mike Cheley, Principal, Information Architect, Graphtek CMS, Inc., Palm Desert, CA (mcheley@graphtek.com); Jo Ann M Eliason, MA, ELS(D), Communications Manager, JNS Publishing Group; Brandon J. Fiedor, Director of Publications, NEUROSURGERY® Publications; Dennis Malkasian, PhD, MD, Neurosurgeon, Irvine, California; Tiit Mathiesen, MD, Editor in Chief, Acta Neurochirurgica.

References

1. “Anecdote.” Merriam-Webster Dictionary. Available from: https://www.merriam-webster.com/dictionary/anecdote [Last accessed on 2020 Mar 25].

2. Brainerd J. China sours on publication metrics. Science. 2020. 367: 960

3. Brainerd J. Publishers Roll Out Alternative Routes to Open Access. Available from: https://www.sciencemag.org/news/2020/03/publishers-roll-out-alternative-routes-open-access [Last accessed on 2020 Mar 05].

4. Brainerd J. Scientific societies worry about threat from plan S. Science. 2019. 363: 332-3

5. Brainerd J. New deals could help scientific societies survive open access. Science. 2019. 365: 1229

6. Cabrera D, Vartabedian BS, Spinner RJ, Jordan BL, Aase LA, Timimi FK. More than likes and tweets: Creating social media portfolios for academic promotion and tenure. J Grad Med Educ. 2017. 9: 421-5

7. Clarivate Analytics: InCites Journal Citation Reports (Takes the Place of Thomson-Reuters Impact Factors) Journal Data Filtered By: Selected JCR Year: 2019 Selected Editions: SCIE, SSCI Selected Category Scheme: WoS. See Clarivate Analytics for JCR: May Require Sign In.

8. Coccia CT, Ausman JI. Is a case report an anecdote? In defense of personal observations in medicine. Surg Neurol. 1987. 28: 111-3

9. Google Scholar Citation Indices Mean and Median: Google Scholar Citation Indices. Available from: https://www.scholar.google.com/citations?view_op=top_venues&hl=en&vq=med_neurosurgery [Last accessed on 2020 Mar 22].

10. Hamilton DP. Publishing by--and for?--the Numbers. Science. 1990. 250: 1331-2

11. Hiltzik M. In Act of Brinkmanship, a Big Publisher Cuts Off Access to its Academic Journals. Available from: https://www.latimes.com/business/hiltzik/la-fi-uc-elsevier-20190711-story.html [Last accessed on 2020 Mar 05].

12. Kaiser J. Half of all papers now free in some form, study claims. Science. 2013. 341: 830

13. Science contributors. Norway cancels Elsevier. Science. 2019. 363: 1255

14. SJR Data. Available from: https://www.scimagojr.com/journalrank.php?category2728&area=2700&type=j&page-5&total_size=360 [Last accessed on 2020 Mar 24].

15. Thomson Reuters Impact Factors. Available from: https://www.researchgate.net/publication/322601291_Journal_Impact_Factor_2017-_Thomson_Reuters [Last accessed on 2020 Mar 05].

16. Wikipedia Contributors; Scientific Journal. Available from: https://en.wikipedia.org/w/index.php?title=Scientific_journal&oldid=945583311 [Last accessed on 2020 Mar 05].
