- School of Medicine, University of Pittsburgh, Pittsburgh, United States

- Department of Neurosurgery, University of Pittsburgh Medical Center, Pittsburgh, United States

- Department of Computing and Information, University of Pittsburgh, Pittsburgh, United States

- Department of Orthopaedic Surgery, University of Pittsburgh Medical Center, Pittsburgh, United States

- Department of Biology, Haverford College, Haverford, Pennsylvania, United States

Correspondence Address:

Nikhil Sharma, School of Medicine, University of Pittsburgh, Pittsburgh, Pennsylvania, United States.

DOI:10.25259/SNI_167_2024

Copyright: © 2024 Surgical Neurology International. This is an open-access article distributed under the terms of the Creative Commons Attribution-Non Commercial-Share Alike 4.0 License, which allows others to remix, transform, and build upon the work non-commercially, as long as the author is credited and the new creations are licensed under the identical terms.

How to cite this article: Nikhil Sharma, Arka N. Mallela, Talha Khan, Stephen Paul Canton, Nicolas Matheo Kass, Fritz Steuer, Jacquelyn Jardini, Jacob Biehl, Edward G. Andrews. Evolution of the meta-neurosurgeon: A systematic review of the current technical capabilities, limitations, and applications of augmented reality in neurosurgery. 26-Apr-2024;15:146

How to cite this URL: Nikhil Sharma, Arka N. Mallela, Talha Khan, Stephen Paul Canton, Nicolas Matheo Kass, Fritz Steuer, Jacquelyn Jardini, Jacob Biehl, Edward G. Andrews. Evolution of the meta-neurosurgeon: A systematic review of the current technical capabilities, limitations, and applications of augmented reality in neurosurgery. 26-Apr-2024;15:146. Available from: https://surgicalneurologyint.com/surgicalint-articles/12870/

Abstract

Background: Augmented reality (AR) applications in neurosurgery have expanded over the past decade with the introduction of headset-based platforms. Many studies have focused on either preoperative planning to tailor the approach to the patient’s anatomy and pathology or intraoperative surgical navigation, primarily realized as AR navigation through microscope oculars. Additional efforts have been made to validate AR in trainee and patient education and to investigate novel surgical approaches. Our objective was to provide a systematic overview of AR in neurosurgery, describe the current limitations of this technology, and highlight several applications of AR in neurosurgery.

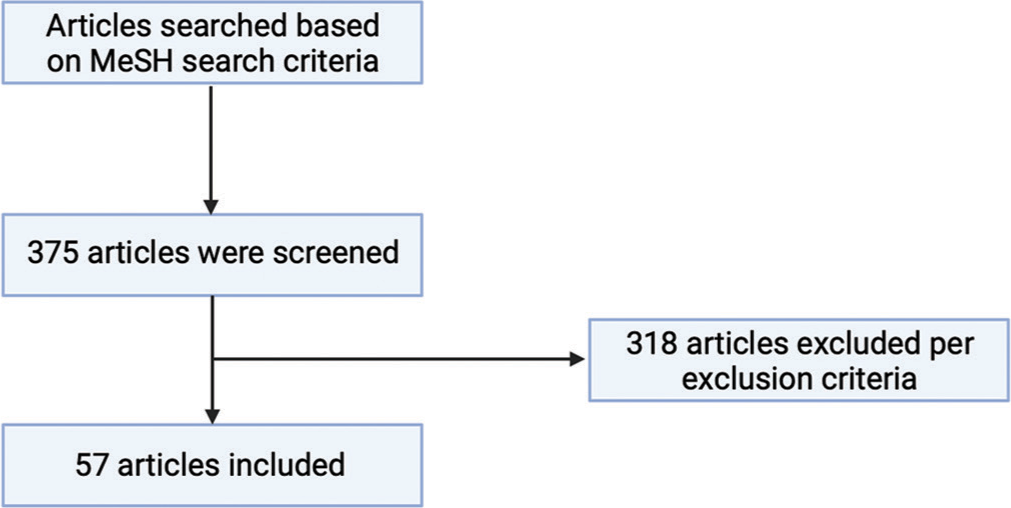

Methods: We performed a literature search in PubMed/Medline to identify papers that addressed the use of AR in neurosurgery. The authors screened 375 papers, of which 57 were selected, analyzed, and included in this systematic review.

Results: AR has made significant inroads in neurosurgery, particularly in neuronavigation. In spinal neurosurgery, this primarily has been used for pedicle screw placement. AR-based neuronavigation also has significant applications in cranial neurosurgery, including neurovascular, neurosurgical oncology, and skull base neurosurgery. Other potential applications include operating room streamlining, trainee and patient education, and telecommunications.

Conclusion: AR has already made a significant impact in neurosurgery in the above domains and has the potential to be a paradigm-altering technology. Future development in AR should focus on both validating these applications and extending the role of AR.

Keywords: Augmented reality, Education, Neurosurgery, Simultaneous localization and mapping (SLAM)

INTRODUCTION

Augmented reality (AR) has had increasing use within the medical community and has vast clinical potential. By providing surgeons with the ability to superimpose patient imaging directly onto the surgical field in real-time, AR permits multimodal synthesis of diverse patient data streams.

For this review, AR is defined as integrating interactive digital content within the user’s physical environment.

Current literature contains several summaries of the applications of AR within the various sub-specialities of neurosurgery, but few, if any, elaborate on the current limitations and barriers to the seamless integration of AR into the operating room (OR). Here, our objective was to contextualize the rapid clinical development of AR in neurosurgery, summarize ongoing technological developments and challenges in AR, and discuss surgical and non-surgical applications of AR in neurosurgery.

METHODS

A literature search covering the past 30 years was completed within PubMed/Medline to identify papers that addressed the use of AR in neurosurgery. Inclusion criteria were as follows: manuscripts in which authors used AR or mixed reality (MR) platforms, manuscripts in which the focus revolved around neurosurgery, and manuscripts written in English. Exclusion criteria were as follows: manuscripts where virtual reality (VR) platforms were primarily used; manuscripts where full texts were not available; manuscripts that could not be obtained in English; and manuscripts that were only editorial/opinion pieces. The “MeSH” search strategies were as follows: “Augmented Reality” and “neurosurgery,” “surgical guidance,” “brain tumor surgery,” “neurovascular surgery,” “spinal surgery,” “neurosurgical education,” “patient education,” “surgical telecommunication,” and “surgical mentorship.” A total of 375 papers were screened. The authors of the current paper selected and reviewed 57 papers based on the criteria of relevance to AR, neurosurgery, and research/clinical validity.

Figure 1:

PRISMA flowchart of included articles. Figure 1 shows the flow of screened and included articles in this review. Articles were searched using the MeSH search criteria described above, yielding 375 total articles. Exclusion criteria (manuscripts where virtual reality platforms were primarily used; manuscripts where full texts were not available; manuscripts that could not be obtained in English; and manuscripts that were only editorial/opinion pieces) excluded 318 articles, which left 57 final articles used in this review. Flowchart created with BioRender.com.

Data pertaining to the applications of AR in neurosurgical guidance, training, patient education, and streaming were collected. The limited number of studies prevented the assessment of biases in this literature. The authors followed the PRISMA guidelines.

RESULTS

Technical overview

AR platforms are typically composed of three essential components: the tracking system, computer processing system (CPS), and display.

Image preparation

In the context of navigation, the patient images can be segmented before surgery to highlight desired structures. While segmentation is not strictly necessary before utilizing AR, segmentation can assist in neuronavigation and education.

Tracking system

The CPS is also connected to the tracking system, allowing the virtual image to be updated as the surgeon’s perspective changes. The system also maintains proper registration, continuously aligning virtual content with fixed locations in three-dimensional (3D) space. Continuous registration depends on physical reference markers (fiducials) remaining in constant view of the system’s cameras and sensors. Artificial optical, infrared, and fluorescent markers are the three most common markers used for tracking. However, emerging techniques are leveraging artificial intelligence-based optical tracking of anatomic structures.
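To make the idea of fiducial-based registration concrete, the following is a minimal sketch and not the method of any system covered in this review. It assumes a simplified 2D setting in which each fiducial has a known position in the image and a measured position on the patient, and it solves for the least-squares rotation and translation that align the two sets. Clinical platforms solve the 3D analogue of this problem. All function names are hypothetical.

```python
import math

def register_rigid_2d(image_pts, patient_pts):
    """Least-squares rotation and translation mapping image_pts onto patient_pts."""
    n = len(image_pts)
    # Centroids of both fiducial sets.
    cix = sum(p[0] for p in image_pts) / n
    ciy = sum(p[1] for p in image_pts) / n
    cpx = sum(p[0] for p in patient_pts) / n
    cpy = sum(p[1] for p in patient_pts) / n
    # Accumulate dot and cross products of the centered point pairs.
    s_dot = 0.0
    s_cross = 0.0
    for (ax, ay), (bx, by) in zip(image_pts, patient_pts):
        ax, ay = ax - cix, ay - ciy
        bx, by = bx - cpx, by - cpy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    # The optimal rotation angle follows in closed form in 2D.
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated image centroid onto the patient centroid.
    tx = cpx - (c * cix - s * ciy)
    ty = cpy - (s * cix + c * ciy)
    return theta, (tx, ty)

def apply_transform(theta, t, pt):
    """Rotate pt by theta, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * pt[0] - s * pt[1] + t[0], s * pt[0] + c * pt[1] + t[1])

def fiducial_registration_error(theta, t, image_pts, patient_pts):
    """RMS distance between transformed image fiducials and patient fiducials."""
    sq = 0.0
    for a, b in zip(image_pts, patient_pts):
        x, y = apply_transform(theta, t, a)
        sq += (x - b[0]) ** 2 + (y - b[1]) ** 2
    return math.sqrt(sq / len(image_pts))
```

The residual returned by `fiducial_registration_error` is one simple way to quantify how well the virtual content aligns with the markers. A system would need to recompute it whenever marker positions are updated.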

Most commercially available headsets such as the HoloLens (Microsoft, Redmond, WA) and Magic Leap (Magic Leap, Plantation, FL) additionally employ simultaneous localization and mapping (SLAM) algorithms to aid fiducial-based tracking. This entails sensors mounted on the headset, such as a 2D RGB camera, infrared camera, environmental cameras, and an inertial measurement unit (which includes an accelerometer, gyroscope, and magnetometer), feeding information about the position and orientation of the head-mounted display (HMD) relative to physical space (e.g., walls, stationary objects) into the SLAM system. These inputs help identify anchor points in the environment against which HMD movement in physical space is compared and measured. The accuracy of this environmental mapping directly affects the ability to track anatomy or tools within the surgical site.

Technical limitations of AR

Optical error

The advancement of AR in surgical applications is dependent on continued advancement in environmental and artifact tracking. Most techniques used to register and track patient anatomy and surgical tools leverage monocular RGB images and video streams to detect and track planar fiducial markers.

This approach introduces inaccuracies at several stages. First, the resolution of even the most sophisticated cameras is finite. Thus, the camera itself produces an approximation of the actual scene, and specifically of the fiducial markers. Low-fidelity camera views can reduce the accuracy with which computer vision techniques approximate the relative fiducial position. Second, if a fiducial is occluded, or anything else obstructs the camera’s view of the planar marker, the system will lose the ability to track the fiducial. Many systems anticipate this behavior by using multiple fiducial markers. This itself introduces another layer of inaccuracy, however, as the tracking errors of each marker accumulate. Third, RGB-based markers require consistent lighting conditions to be properly seen by the cameras. Changes in lighting conditions will impact the ability of computer vision systems to translate fiducial characteristics to known geometry. Recent approaches mitigate the challenges of limited resolution by adding information from depth cameras. This additional dimension of information can increase the capabilities to determine the location of the fiducial in 3D space.
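The effect of finite resolution and the benefit of a depth measurement can be illustrated with a minimal sketch under an idealized pinhole camera model. This is an assumption made for illustration and not a description of any specific headset. The function names and the intrinsic parameters (`fx`, `fy`, `cx`, `cy`, in pixels) are hypothetical.

```python
def pixel_footprint_mm(depth_mm, focal_px):
    """Approximate lateral size covered by one pixel at a given depth.

    Under the pinhole model a one-pixel shift corresponds to a lateral
    displacement of depth / focal_length, which bounds how finely a
    fiducial edge can be localized from a single image.
    """
    return depth_mm / focal_px

def back_project(u, v, depth_mm, fx, fy, cx, cy):
    """Convert pixel (u, v) plus a measured depth into a 3D camera-frame point."""
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    return (x, y, depth_mm)

# Hypothetical example: a marker corner seen 100 px right of the image
# center, 500 mm from a camera with a 1000 px focal length.
corner = back_project(740, 360, 500.0, 1000.0, 1000.0, 640.0, 360.0)
footprint = pixel_footprint_mm(500.0, 1000.0)
```

In this example one pixel spans about half a millimeter at the marker, so a localization error of a few pixels already approaches the millimeter scale. A depth camera supplies `depth_mm` directly instead of inferring it from the apparent size of the marker.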

Location estimation error

Techniques used to determine position and orientation in physical space are based on stochastic localization techniques (e.g., SLAM) that rely on unique visual or perspective details from camera and sensor data. These “features” must contain enough texture or variance in visual appearance to be observed consistently. Smooth surfaces, or those with minimal contrasting details, present performance challenges for these techniques. Furthermore, movement of the HMD’s camera and sensors, or viewing features from extreme perspectives, may impair repositioning and thus the ability to track these features. The resulting location estimates can vary widely in accuracy, and this variability carries over to the registration and placement of virtual content in the AR-HMD.
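As a rough illustration of why smooth surfaces are hard to track, the sketch below scores an image patch by the variance of its pixel intensities and rejects patches that fall below a threshold. This is a deliberately simplified stand-in for the feature detectors used in practice. The function name and the threshold value are hypothetical.

```python
from statistics import pvariance

def has_enough_texture(patch, min_variance=100.0):
    """Return True if a grayscale patch varies enough to serve as a feature.

    patch is a list of rows of 0-255 intensity values; min_variance is an
    illustrative threshold, not a value taken from any real system.
    """
    pixels = [value for row in patch for value in row]
    return pvariance(pixels) >= min_variance

# A uniform patch (e.g., a smooth drape) versus a high-contrast checker patch.
smooth_patch = [[128] * 4 for _ in range(4)]
checker_patch = [[0, 255, 0, 255], [255, 0, 255, 0]] * 2
```

Here `smooth_patch` has zero variance and would be rejected, whereas `checker_patch` passes easily. This mirrors the observation above that low-contrast surfaces provide few reliable anchor points.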

Future directions

Future approaches will likely involve direct tracking of anatomy and tools by utilizing more sophisticated, rapidly evolving approaches for tracking, localizing, recognizing, and/or aligning models and physical objects. For instance, rather than detecting the fiducial marker on a tool, computer vision could be used to detect, localize, and track the tool itself. This could reduce errors from occlusion and provide better registration in global coordinates. Approaches that leverage patient anatomy recognition for registration are especially difficult. These techniques must understand complex shapes with limited views and adapt not only to feature occlusion but also to changes in pose and distortion due to the surgical activity. Despite this, there are significant opportunities for AR in neurosurgery. As technology continues to evolve, AR will most likely become the superior navigational platform as it may have enhanced accuracy and precision.

To complement advances in tracking, future efforts should also advance the communication and interaction between the surgeon and the suite of assistive technologies. It is unlikely that any practical, scalable tracking technique will achieve sub-millimeter precision. A natural next step in the evolution of AR tools is real-time communication with the surgeon about overall system performance and tracking performance, allowing surgeons to better understand the reliability of the information provided. Modern geo-location user experiences already model such interaction approaches; for example, smartphone maps do not show a pinpoint location but rather “halos” of confidence based on current environmental conditions. Surgical guidance tools should provide surgeons with similar feedback on tracking margins and on approaches to the operative task that minimize tracking error.
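One way such a confidence “halo” could be derived is sketched below. It assumes the system keeps a short history of recent 3D position estimates (in millimeters) for a marker that should be stationary, and it reports the radius around their centroid that contains a chosen fraction of those estimates. The function name and the 95% coverage default are hypothetical choices for illustration.

```python
import math

def confidence_halo(samples, coverage=0.95):
    """Return (centroid, radius) enclosing the given fraction of samples.

    samples is a list of (x, y, z) position estimates in millimeters.
    """
    n = len(samples)
    centroid = tuple(sum(p[i] for p in samples) / n for i in range(3))
    # Sort distances from the centroid and pick the coverage quantile.
    dists = sorted(math.dist(p, centroid) for p in samples)
    k = max(0, math.ceil(coverage * n) - 1)
    return centroid, dists[k]

# Hypothetical jittering estimates of a stationary tool tip.
recent = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (2.0, 2.0, 0.0)]
center, radius_mm = confidence_halo(recent)
```

A display could render the overlay with a margin of `radius_mm` around it, or warn the surgeon when the radius exceeds a task-specific tolerance.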

HUD versus HMD systems

Although microscope-based heads-up displays (HUDs) with AR are frequently used in neurovascular surgery, they have a fixed and limited field of view. They also cannot integrate with other data streams in the OR. In contrast, AR-HMDs still struggle to capture microscopic structures at a level comparable to HUD systems. In addition, AR headsets can be challenging to use for longer procedures since the surgeon carries the device’s weight, in contrast to the microscope.

Applications of AR in neurosurgery

Neurosurgical oncology

AR can accurately display holographic renderings of tumors directly onto the surgeon’s operating view. This makes AR ideal for both preoperative planning and intraoperative surgical guidance. AR allows for easier and faster incision and craniotomy planning compared to standard monitor-based navigation.

This interactive digital projection also allows the surgeon to visualize the entire tumor during resection, even with obstructions. Furthermore, data from multiple imaging modalities can be combined into one projection to allow visualization of critical structures such as deep nuclei and white matter tracts in conjunction with intraoperative 5-ALA fluorescence.

Early studies suggest that AR-based neuronavigation may increase the extent of safe surgical tumor resection from eloquent areas compared to standard frameless neuronavigation. This may be due to the ability of the AR system’s hardware to recognize brain volume adjustments from brain shifts using continual reregistration algorithms.

Cerebrovascular

AR has been used successfully in cerebrovascular surgery for intraoperative navigation during the treatment of numerous pathologies. One of the first studies evaluating AR in neurovascular procedures utilized a system with an overlay of aneurysm morphology segmented from preoperative imaging projected through a microscope HUD to guide clip placement. Cabrilo et al. found that the AR overlay was useful in clip placement in 92% of cases (n = 33/39).

AR systems can also be utilized to identify the topology, angioarchitecture, and location of critical vessels intraoperatively without the use of injected dyes.

AR has been used as an adjunct during superficial temporal artery (STA) to middle cerebral artery bypass surgery. Rychen et al. found that AR was useful in guiding dissection of the highly variable and tortuous STA and also aided in sparing the middle meningeal artery during the craniotomy, all while increasing the confidence of the operating surgeon.

Spine

AR is an ideal system for intraoperative guidance in spine surgery, as single trajectories can be displayed to guide pedicle cannulation and screw placement accurately. Conventionally, pedicle screw placement may be done freehand, with fluoroscopy, or with screen-based navigation. AR’s advantages in this setting include easier cannulation of complex pedicle orientations (as in scoliosis), avoidance of complications such as neurovascular injury, reduced radiation exposure, and decreased surgical time.

AR-based navigation circumvents many of the above concerns with traditional guidance techniques. Molina et al., using the Xvision Spine System (Augmedics, Arlington Heights, IL), reported 96% insertion accuracy on the Heary-Gertzbein scale for AR-guided pedicle screws placed from T6 to L5 in cadaveric spine specimens, and compared this result with the freehand, manual computer-navigated, and robotic-assisted rates found in the literature.

Non-surgical applications of AR

Applications of AR in resident education

AR has the potential to provide residents with a relatively risk-free simulation environment in which residents can supplement skills developed in the OR.

The Perk Tutor system was one of the earliest AR-specific systems evaluated for neurosurgery trainees practicing spinal procedures.

An AR-HMD is also useful in helping residents train for cranial procedures such as burr-hole localization. Residents who used the HoloLens AR system on holographic models had significantly lower drill angle errors when compared with residents who used either 2D CT/magnetic resonance images or 3D neuronavigational systems.

Applications of AR in telecommunication and telementoring during surgery

AR-HMDs can provide live streaming and teleconsultation, allowing remote assistance during operations.

Similarly, AR facilitates remote instruction of neurosurgeons in a process known as telementoring. Up to 5 million people each year do not have access to safe and affordable neurosurgical interventions, and those in low- and middle-income countries are disproportionately affected.

Applications of AR in patient education

Most written resources describing neurosurgical procedures and conditions are written at a reading level above what the U.S. Centers for Disease Control and Prevention recommends. In addition, nearly a quarter of patients have poor health literacy.

Figure 2:

Multiple paradigm-altering uses of augmented reality (AR) in neurosurgery. Neurosurgical applications of AR currently consist largely of intraoperative navigation but have numerous future uses as well. (a) Preoperative planning in cranial neurosurgery. AR allows seamless merging of patient imaging with the intraoperative field. Once the operation begins, the external screen is not necessary. (b) Neuronavigation in spinal neurosurgery. The operator can place spinal pedicle screws without looking at an external screen while cosurgeons and observers can watch on their own AR headset or an external screen. (c) AR in trainee education. In contrast to the usual 2D lecture environment, learners can interactively explore three-dimensional anatomy and even simulate surgery. (d) Tele-robotic surgery. With future development of robotic technologies and haptic feedback, the surgeon can remotely perform basic neurosurgical procedures, permitting greater access and dissemination of life-saving neurosurgical care. (e) AR in patient education. Neurosurgeons can interactively demonstrate and explain surgical procedures in simple visual terms to patients, promoting greater patient understanding and simplifying the counseling and informed consent process. Credit: Jim Sweat.

CONCLUSION

AR in neurosurgery is rapidly evolving and has significant promise. Surgically, AR has primarily been used for neuronavigation by allowing surgeons to overlay images from various modalities directly onto the surgical field. This results in a more multimodal exploration of the unique anatomy of each patient and potentially increases the accuracy and precision of neurosurgical procedures. Second, AR is a powerful educational tool for both trainees and patients, allowing for guided mentorship and simulation for the former and immersive and lucid communication for the latter. Finally, AR-based telementoring and telecommunication can help bring advanced neurosurgical procedures to underserved regions. While considerable progress has been made in AR optical and fiducial-based tracking, several technological limitations still exist, particularly regarding optical and location estimation error. As imaging technologies continue to advance, the usage of AR in neurosurgery will become widespread. It is, therefore, critical for industry and academia to collaborate in addressing these various technological barriers and facilitate the full transition of AR into the OR.

Ethical approval

Institutional Review Board approval was not required.

Declaration of patient consent

Patient consent was not required, as there are no patients in this study.

Financial support and sponsorship

The Tull Family Foundation.

Conflicts of interest

There are no conflicts of interest.

Use of artificial intelligence (AI)-assisted technology for manuscript preparation

The authors confirm that there was no use of artificial intelligence (AI)-assisted technology for assisting in the writing or editing of the manuscript and no images were manipulated using AI.

Disclaimer

The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of the Journal or its management. The information contained in this article should not be considered to be medical advice; patients should consult their own physicians for advice as to their specific medical needs.

Acknowledgments

The Tull Family Foundation – whose generous donation supported this research.

Austin Anthony, MD; Jeffrey R. Head, MD; Lucille Cheng, BA; Hussam Abou-Al-Shaar, MD; and Joseph C. Maroon, MD – for their assistance in compiling this manuscript.

Jim Sweat, for his medical illustrations.

References

1. Armstrong DG, Rankin TM, Giovinco NA, Mills JL, Matsuoka Y. A heads-up display for diabetic limb salvage surgery: A view through the google looking glass. J Diabetes Sci Technol. 2014. 8: 951-6

2. Auquier P, Pernoud N, Bruder N, Simeoni MC, Auffray JP, Colavolpe C. Development and validation of a perioperative satisfaction questionnaire. Anesthesiology. 2005. 102: 1116-23

3. Barsom EZ, Graafland M, Schijven MP. Systematic review on the effectiveness of augmented reality applications in medical training. Surg Endosc. 2016. 30: 4174-83

4. Baum ZM, Lasso AR, Ungi T, Rae E, Zevin B, Levy R. Augmented reality training platform for neurosurgical burr hole localization. J Med Robot Res. 2019. 4: 1942001

5. Bekelis K, Calnan D, Simmons N, MacKenzie TA, Kakoulides G. Effect of an immersive preoperative virtual reality experience on patient reported outcomes: A randomized controlled trial. Ann Surg. 2017. 265: 1068-73

6. Besharati Tabrizi L, Mahvash M. Augmented reality-guided neurosurgery: Accuracy and intraoperative application of an image projection technique. J Neurosurg. 2015. 123: 206-11

7. Boyaci MG, Fidan U, Yuran AF, Yildizhan S, Kaya F, Kimsesiz O. Augmented reality supported cervical transpedicular fixation on 3D-printed vertebrae model: An experimental education study. Turk Neurosurg. 2020. 30: 937-43

8. Cabrilo I, Bijlenga P, Schaller K. Augmented reality in the surgery of cerebral aneurysms: A technical report. Neurosurgery. 2014. 10: 252-60 discussion 260-51

9. Cannizzaro D, Zaed I, Safa A, Jelmoni AJ, Composto A, Bisoglio A. Augmented reality in neurosurgery, state of art and future projections. A systematic review. Front Surg. 2022. 9: 864792

10. Chen J, Kumar S, Shallal C, Leo KT, Girard A, Bai Y. Caregiver preferences for three-dimensional printed or augmented reality craniosynostosis skull models: A cross-sectional survey. J Craniofac Surg. 2022. 33: 151-5

11. Cho J, Rahimpour S, Cutler A, Goodwin CR, Lad SP, Codd P. Enhancing reality: A systematic review of augmented reality in neuronavigation and education. World Neurosurg. 2020. 139: 186-95

12. Contreras Lopez WO, Navarro PA, Crispin S. Intraoperative clinical application of augmented reality in neurosurgery: A systematic review. Clin Neurol Neurosurg. 2019. 177: 6-11

13. Davidovic A, Chavaz L, Meling TR, Schaller K, Bijlenga P, Haemmerli J. Evaluation of the effect of standard neuronavigation and augmented reality on the integrity of the perifocal structures during a neurosurgical approach. Neurosurg Focus. 2021. 51: E19

14. Durrani S, Onyedimma C, Jarrah R, Bhatti A, Nathani KR, Bhandarkar AR. The virtual vision of neurosurgery: How augmented reality and virtual reality are transforming the neurosurgical operating room. World Neurosurg. 2022. 168: 190-201

15. Felix B, Kalatar SB, Moatz B, Hofstetter C, Karsy M, Parr R. Augmented reality spine surgery navigation: Increasing pedicle screw insertion accuracy for both open and minimally invasive spine surgeries. Spine (Phila Pa 1976). 2022. 47: 865-72

16. Fiani B, De Stefano F, Kondilis A, Covarrubias C, Reier L, Sarhadi K. Virtual reality in neurosurgery: “Can you see it?”-a review of the current applications and future potential. World Neurosurg. 2020. 141: 291-8

17. Fida B, Cutolo F, di Franco G, Ferrari M, Ferrari V. Augmented reality in open surgery. Updates Surg. 2018. 70: 389-400

18. Gertzbein SD, Robbins SE. Accuracy of pedicular screw placement in vivo. Spine (Phila Pa 1976). 1990. 15: 11-4

19. Gibby W, Cvetko S, Gibby A, Gibby C, Sorensen K, Andrews EG. The application of augmented reality-based navigation for accurate target acquisition of deep brain sites: Advances in neurosurgical guidance. J Neurosurg. 2021. 137: 1-7

20. Godzik J, Farber SH, Urakov T, Steinberger J, Knipscher LJ, Ehredt RB. “Disruptive technology” in spine surgery and education: Virtual and augmented reality. Oper Neurosurg (Hagerstown). 2021. 21: S85-93

21. Grosch AS, Schroder T, Schroder T, Onken J, Picht T. Development and initial evaluation of a novel simulation model for comprehensive brain tumor surgery training. Acta Neurochir (Wien). 2020. 162: 1957-65

22. Haemmerli J, Davidovic A, Meling TR, Chavaz L, Schaller K, Bijlenga P. Evaluation of the precision of operative augmented reality compared to standard neuronavigation using a 3D-printed skull. Neurosurg Focus. 2021. 50: E17

23. Heary RF, Bono CM, Black M. Thoracic pedicle screws: Postoperative computerized tomography scanning assessment. J Neurosurg. 2004. 100: 325-31

24. Hecht R, Li M, de Ruiter QM, Pritchard WF, Li X, Krishnasamy V. Smartphone augmented reality CT-based platform for needle insertion guidance: A phantom study. Cardiovasc Intervent Radiol. 2020. 43: 756-64

25. Higginbotham G. Virtual connections: Improving global neurosurgery through immersive technologies. Front Surg. 2021. 8: 629963

26. Hiranaka T, Nakanishi Y, Fujishiro T, Hida Y, Tsubosaka M, Shibata Y. The use of smart glasses for surgical video streaming. Surg Innov. 2017. 24: 151-4

27. Huang KT, Ball C, Francis J, Ratan R, Boumis J, Fordham J. Augmented versus virtual reality in education: An exploratory study examining science knowledge retention when using augmented reality/virtual reality mobile applications. Cyberpsychol Behav Soc Netw. 2019. 22: 105-10

28. Keri Z, Sydor D, Ungi T, Holden MS, McGraw R, Mousavi P. Computerized training system for ultrasound-guided lumbar puncture on abnormal spine models: A randomized controlled trial. Can J Anaesth. 2015. 62: 777-84

29. Kersten-Oertel M, Gerard I, Drouin S, Mok K, Sirhan D, Sinclair DS. Augmented reality in neurovascular surgery: Feasibility and first uses in the operating room. Int J Comput Assist Radiol Surg. 2015. 10: 1823-36

30. Kovoor JG, Gupta AK, Gladman MA. Validity and effectiveness of augmented reality in surgical education: A systematic review. Surgery. 2021. 170: 88-98

31. Lee MH, Lee TK. Cadaver-free neurosurgical simulation using a 3-dimensional printer and augmented reality. Oper Neurosurg (Hagerstown). 2022. 23: 46-52

32. Li Y, Chen X, Wang N, Zhang W, Li D, Zhang L. A wearable mixed-reality holographic computer for guiding external ventricular drain insertion at the bedside. J Neurosurg. 2018. 131: 1-8

33. Liebmann F, Roner S, von Atzigen M, Scaramuzza D, Sutter R, Snedeker J. Pedicle screw navigation using surface digitization on the Microsoft HoloLens. Int J Comput Assist Radiol Surg. 2019. 14: 1157-65

34. Lo S, Chapman P. The first worldwide use and evaluation of augmented reality (AR) in “Patient information leaflets” in plastic surgery. J Plast Reconstr Aesthet Surg. 2020. 73: 1357-404

35. Louis RG, Steinberg GK, Duma C, Britz G, Mehta V, Pace J. Early experience with virtual and synchronized augmented reality platform for preoperative planning and intraoperative navigation: A case series. Oper Neurosurg (Hagerstown). 2021. 21: 189-96

36. Meola A, Cutolo F, Carbone M, Cagnazzo F, Ferrari M, Ferrari V. Augmented reality in neurosurgery: A systematic review. Neurosurg Rev. 2017. 40: 537-48

37. Mikhail M, Mithani K, Ibrahim GM. Presurgical and intraoperative augmented reality in neuro-oncologic surgery: Clinical experiences and limitations. World Neurosurg. 2019. 128: 268-76

38. Molina CA, Theodore N, Ahmed AK, Westbroek EM, Mirovsky Y, Harel R. Augmented reality-assisted pedicle screw insertion: A cadaveric proof-of-concept study. J Neurosurg Spine. 2019. 31: 1-8

39. Moult E, Ungi T, Welch M, Lu J, McGraw RC, Fichtinger G. Ultrasound-guided facet joint injection training using Perk Tutor. Int J Comput Assist Radiol Surg. 2013. 8: 831-6

40. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ. 2021. 372: n71

41. Park JH, Lee JY, Lee BH, Jeon HJ, Park SW. Free-hand cervical pedicle screw placement by using para-articular minilaminotomy: Its feasibility and novice neurosurgeons’ experience. Global Spine J. 2021. 11: 662-8

42. Perez-Pachon L, Sharma P, Brech H, Gregory J, Lowe T, Poyade M. Effect of marker position and size on the registration accuracy of HoloLens in a non-clinical setting with implications for high-precision surgical tasks. Int J Comput Assist Radiol Surg. 2021. 16: 955-66

43. Roessler K, Krawagna M, Dorfler A, Buchfelder M, Ganslandt O. Essentials in intraoperative indocyanine green videoangiography assessment for intracranial aneurysm surgery: Conclusions from 295 consecutively clipped aneurysms and review of the literature. Neurosurg Focus. 2014. 36: E7

44. Rychen J, Goldberg J, Raabe A, Bervini D. Augmented reality in superficial temporal artery to middle cerebral artery bypass surgery: Technical note. Oper Neurosurg (Hagerstown). 2020. 18: 444-50

45. Schneider M, Kunz C, Pal’a A, Wirtz CR, Mathis-Ullrich F, Hlavac M. Augmented reality-assisted ventriculostomy. Neurosurg Focus. 2021. 50: E16

46. Sezer S, Piai V, Kessels RPC, Ter Laan M. Information recall in pre-operative consultation for glioma surgery using actual size three-dimensional models. J Clin Med. 2020. 9: 3660

47. Sharma N, Mallela AN, Shi DD, Tang LW, Abou-Al-Shaar H, Gersey ZC. Isocitrate dehydrogenase mutations in gliomas: A review of current understanding and trials. Neurooncol Adv. 2023. 5: vdad053

48. Shenai MB, Tubbs RS, Guthrie BL, Cohen-Gadol AA. Virtual interactive presence for real-time, long-distance surgical collaboration during complex microsurgical procedures. J Neurosurg. 2014. 121: 277-84

49. Shlobin NA, Clark JR, Hoffman SC, Hopkins BS, Kesavabhotla K, Dahdaleh NS. Patient education in neurosurgery: Part 1 of a systematic review. World Neurosurg. 2021. 147: 202-14.e1

50. Shuhaiber JH. Augmented reality in surgery. Arch Surg. 2004. 139: 170-4

51. Sun GC, Wang F, Chen XL, Yu XG, Ma XD, Zhou DB. Impact of virtual and augmented reality based on intraoperative magnetic resonance imaging and functional neuronavigation in glioma surgery involving eloquent areas. World Neurosurg. 2016. 96: 375-82

52. Toyooka T, Otani N, Wada K, Tomiyama A, Takeuchi S, Fujii K. Head-up display may facilitate safe keyhole surgery for cerebral aneurysm clipping. J Neurosurg. 2018. 129: 883-9

53. Vassallo R, Kasuya H, Lo BW, Peters T, Xiao Y. Augmented reality guidance in cerebrovascular surgery using microscopic video enhancement. Healthc Technol Lett. 2018. 5: 158-61

54. Vavra P, Roman J, Zonca P, Ihnat P, Nemec M, Kumar J. Recent development of augmented reality in surgery: A review. J Healthc Eng. 2017. 2017: 4574172

55. Yavas G, Caliskan KE, Cagli MS. Three-dimensional-printed marker-based augmented reality neuronavigation: A new neuronavigation technique. Neurosurg Focus. 2021. 51: E20

56. Yoon JW, Chen RE, Han PK, Si P, Freeman WD, Pirris SM. Technical feasibility and safety of an intraoperative head-up display device during spine instrumentation. Int J Med Robot. 2017. 13: 3

57. Yuk FJ, Maragkos GA, Sato K, Steinberger J. Current innovation in virtual and augmented reality in spine surgery. Ann Transl Med. 2021. 9: 94